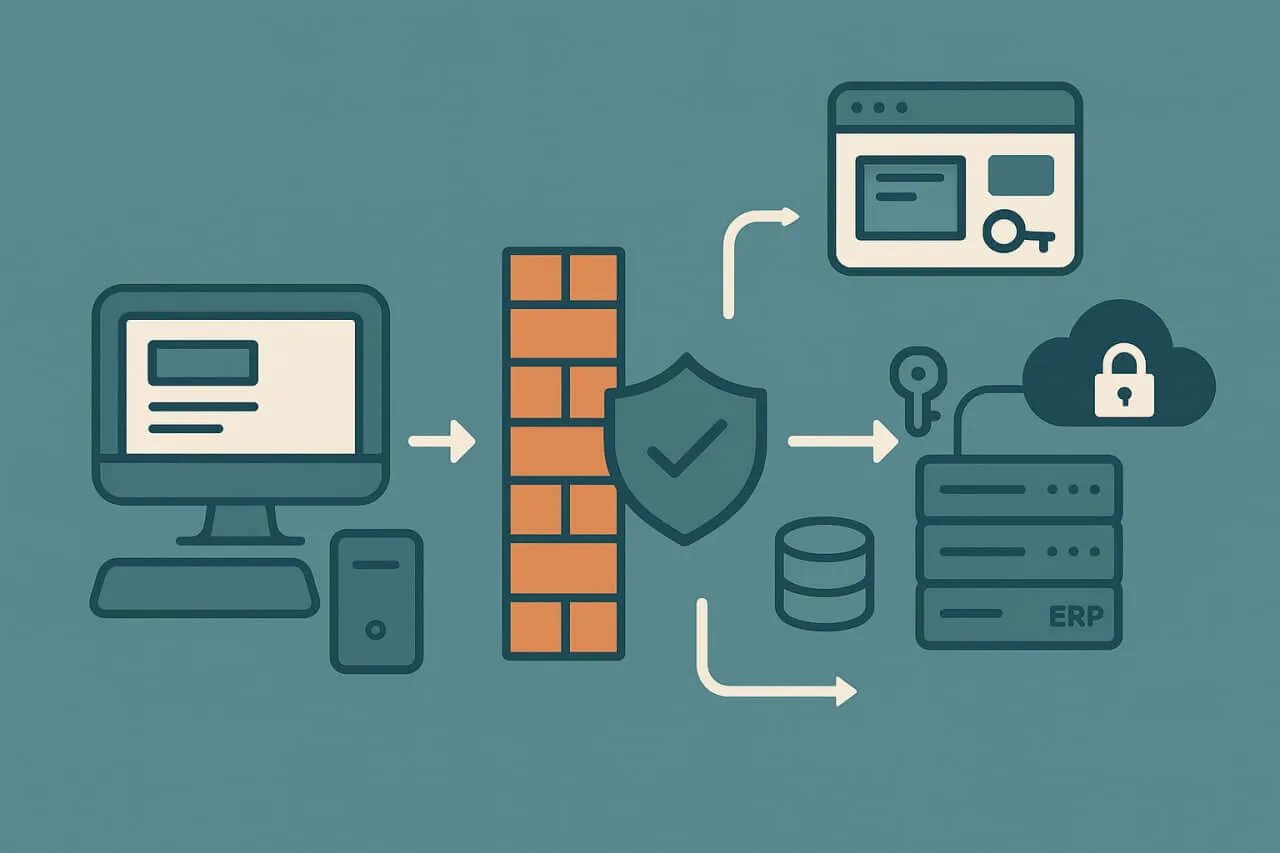

Internal systems such as SAP, Oracle, Microsoft Dynamics, homegrown ERPs, and HR and payroll platforms keep the business running. Yet they’re invisible to most monitoring tools because they sit behind VPNs, firewalls, and complex authentication layers. When something slows down or fails, users feel it first and operations teams hear about it later.

Synthetic monitoring closes that gap, giving enterprises a way to simulate user transactions even when the application isn’t reachable from the public web. With the right architecture—private agents, secure credential management, and workflow-level scripting—you can test internal systems as rigorously as customer-facing sites, without compromising security.

Why Internal Applications Are Hard to Monitor

Synthetic monitoring was born in the era of public websites. Checks ran from cloud nodes, visited URLs, and measured availability. But internal applications live in a different world: segmented networks, legacy technologies, and enterprise authentication stacks that resist automation.

ERP systems like SAP and Oracle aren’t single applications; they’re ecosystems. A seemingly simple “Submit Invoice” action might traverse web front ends, middleware, databases, and identity providers. A delay in any layer can cripple an entire business process. Yet these layers are typically walled off from external agents, meaning conventional monitoring nodes can’t even reach them.

Then comes authentication. Many enterprise apps use SSO tied to Active Directory, Kerberos tickets, or SAML assertions. Others rely on smartcards, hardware tokens, or conditional MFA that synthetic scripts can’t complete. Add Citrix or VMware desktops on top, and you’ve got multiple layers of virtualization separating the monitoring tool from the actual applications.

All of this makes internal systems the hardest and most important to monitor. They demand the precision of synthetic testing but the discretion of a security-compliant architecture.

The Blind Spot Problem in Enterprise Monitoring

Most organizations already run some form of monitoring. APM tools capture traces and logs. Infrastructure monitors watch CPU and memory. But those tools observe systems from the inside. They know a process is running, not whether a workflow works.

Imagine a manufacturing company using SAP Fiori for production orders. A database patch introduces a subtle latency spike, and transactions that once completed in two seconds now take twelve. APM shows normal resource usage, and network tools see healthy connectivity. Only a synthetic transaction—one that logs in, navigates the workflow, and measures the end-to-end time—would catch it.

That’s the blind spot synthetic monitoring eliminates. By simulating user journeys, it provides an “outside-in” perspective on performance that infrastructure metrics can’t reveal. When applied to internal applications, it turns invisible bottlenecks into actionable data.
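The check described in the SAP Fiori example reduces to timing a whole journey and treating "too slow" as a failure. A minimal sketch, assuming each workflow step is wrapped in a Python callable that raises on failure; the step callables and the threshold are hypothetical placeholders, not part of any SAP or monitoring-tool API:

```python
import time
from typing import Callable, Sequence


def run_workflow(steps: Sequence[Callable[[], None]], threshold_s: float) -> dict:
    """Run workflow steps in order and time the whole journey end to end.

    Each step is a hypothetical callable (log in, open the production order,
    submit it) that raises an exception if the step fails.
    """
    start = time.perf_counter()
    try:
        for step in steps:
            step()
    except Exception as exc:  # any failed step fails the whole transaction
        return {"ok": False, "elapsed_s": time.perf_counter() - start,
                "error": repr(exc)}
    elapsed = time.perf_counter() - start
    # The transaction only "works" if it completes AND stays under the threshold.
    return {"ok": elapsed <= threshold_s, "elapsed_s": elapsed, "error": None}
```

The design choice that matters is that slowness counts as failure: a run that completes in twelve seconds against a five-second threshold comes back with `ok: False` and no error, which is exactly the case resource metrics alone would miss.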

Architecting Synthetic Monitoring for Private Networks

Deploying Private Monitoring Agents

The cornerstone of internal monitoring is the private agent. Instead of launching tests from a public cloud, you deploy lightweight agents inside your secure network—within the VPN, in a data center, or in a cloud VPC connected via private link.

These agents execute scripted tests locally, then send only results and metrics back to the monitoring platform over an outbound, encrypted connection. No inbound exposure, no open firewall ports. From the security team’s perspective, it’s just another trusted process making outbound telemetry calls.
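To make the "results only, outbound only" pattern concrete, here is a minimal sketch of what an agent might send after a run. The collector URL and payload fields are hypothetical; a real platform defines its own ingestion API. The agent opens no listening socket; it only initiates an HTTPS POST.

```python
import json
import urllib.request
from datetime import datetime, timezone


def build_result_payload(agent_id: str, check_name: str,
                         ok: bool, elapsed_s: float) -> bytes:
    """Serialize only metrics: no page content, screenshots, or credentials."""
    record = {
        "agent_id": agent_id,
        "check": check_name,
        "ok": ok,
        "elapsed_ms": round(elapsed_s * 1000),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record).encode("utf-8")


def send_result(collector_url: str, payload: bytes, timeout_s: float = 10.0) -> int:
    """Push the payload outbound over HTTPS and return the HTTP status code."""
    if not collector_url.startswith("https://"):
        raise ValueError("results must leave the network over TLS")
    req = urllib.request.Request(
        collector_url, data=payload, method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return resp.status
```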

Placement matters. Agents in different subnets or regions can reveal latency between sites or replication delays across internal services. Treat them as you would global monitoring nodes—just inside your perimeter.

Handling Authentication and SSO

Authentication is where most internal monitoring projects stall. The safest approach is dedicated test accounts—credentials limited to synthetic workflows, stored in a secure vault, and rotated automatically.
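A minimal sketch of how a script might consume such credentials, assuming a vault agent or secrets manager injects them into the agent's environment at run time. The variable names `SYNTH_USER`, `SYNTH_PASSWORD`, and `SYNTH_ROTATED_AT`, and the 30-day rotation window, are hypothetical.

```python
import os
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class TestCredential:
    username: str
    # repr=False keeps the secret out of repr(), tracebacks, and debug dumps.
    password: str = field(repr=False)
    rotated_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def is_stale(self, max_age: timedelta = timedelta(days=30)) -> bool:
        """True if the credential is older than the (hypothetical) rotation policy."""
        return datetime.now(timezone.utc) - self.rotated_at > max_age


def load_credential(env=os.environ) -> TestCredential:
    """Read a dedicated test account from variables set by the vault agent."""
    try:
        user, secret = env["SYNTH_USER"], env["SYNTH_PASSWORD"]
        rotated = datetime.fromisoformat(env["SYNTH_ROTATED_AT"])
    except KeyError as missing:
        # Name the absent variable, never a value, and do not fall back to defaults.
        raise RuntimeError(f"missing secret variable {missing}") from None
    if rotated.tzinfo is None:
        rotated = rotated.replace(tzinfo=timezone.utc)  # assume UTC if unspecified
    return TestCredential(user, secret, rotated)
```

A stale credential can then fail the run loudly instead of silently continuing with an account that should already have been rotated.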

For SSO, there are two viable patterns. One is scripting a full login flow through the identity provider, simulating a browser session end to end. The other is using pre-authenticated tokens or delegated cookies that represent a valid session without repeating the login on every run. The choice depends on whether you’re testing login reliability or post-login performance.

Never log credentials in plaintext, never embed them in scripts, and never reuse production accounts. Treat test credentials as code—versioned, rotated, and auditable.
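For Python-based scripts, one way to back up the "never log credentials" rule is a `logging.Filter` that scrubs known secret values before a handler writes the record. This is a sketch, assuming the script knows its secret values at start-up (for example, from the vault); it complements, rather than replaces, keeping secrets out of log calls in the first place.

```python
import logging


class RedactSecrets(logging.Filter):
    """Replace any known secret value in a log message with a fixed mask."""

    def __init__(self, secrets, mask: str = "***REDACTED***"):
        super().__init__()
        # Ignore empty strings, which would otherwise match everywhere.
        self._secrets = [s for s in secrets if s]
        self._mask = mask

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg with its %-style args first
        for secret in self._secrets:
            message = message.replace(secret, self._mask)
        record.msg, record.args = message, None
        # Note: traceback text from exc_info is NOT scrubbed by this sketch.
        return True  # keep the record, just scrubbed
```

Attach the filter to handlers rather than loggers: a filter on a logger does not see records propagated up from child loggers, while a handler-level filter sees everything that handler emits.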

Monitoring Through Virtualized Layers

Many internal systems are only reachable through remote desktops. Citrix, VMware Horizon, or terminal emulators wrap the actual application in another interface. Modern synthetic tools can interact with those environments using image recognition or DOM-based scripting within the virtual session.

While slower to execute, these checks are invaluable. They test what real users see, not just what APIs report.

Integrating with Enterprise Security Controls

Synthetic monitoring shouldn’t punch holes in your defenses. Instead, it should align with them. Use IP allowlisting, TLS inspection policies, and existing logging frameworks to keep monitoring compliant. All communication between agents and cloud services should be outbound-only, over encrypted channels, and visible in your SIEM.

With those guardrails, security teams stop viewing monitoring as a risk and start seeing it as a control.
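As one example of such a guardrail on the agent side, a script can refuse any destination that is not HTTPS or not on an explicit allowlist, so a misconfigured check cannot ship results to an arbitrary host. A minimal sketch; the host name is hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: the monitoring platform's ingestion endpoint only.
ALLOWED_HOSTS = frozenset({"collector.monitoring.example"})


def check_destination(url: str, allowed=ALLOWED_HOSTS) -> str:
    """Return the hostname if the URL is an allowlisted HTTPS target, else raise."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        raise PermissionError(f"blocked non-TLS destination: {parts.scheme}://")
    # .hostname drops any port and userinfo and is already lowercased.
    host = parts.hostname or ""
    if host not in allowed:
        raise PermissionError(f"blocked destination not on allowlist: {host}")
    return host
```

Matching the exact hostname, rather than a prefix or substring, is deliberate: it rejects look-alikes such as `collector.monitoring.example.evil.example`.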

Use Cases: SAP and Other ERP Platforms

SAP (S/4HANA, NetWeaver, Fiori)

SAP is the poster child for internal-system complexity. A complete transaction touches multiple components: the Fiori web front end, application servers, and the underlying HANA database.

Synthetic monitoring can model those workflows step by step—logging into Fiori, navigating to a purchase order module, submitting a transaction, and verifying confirmation screens or API responses. By running these checks from on-prem agents, teams get latency and availability metrics that reflect the real experience of internal users.
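The step-by-step model above can be expressed as an ordered list of named steps, each timed separately so a slowdown or failure can be attributed to, say, the submission step rather than the login. The step names and actions are hypothetical stand-ins for browser or API interactions.

```python
import time
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_steps(steps: List[Step]) -> dict:
    """Execute named steps in order, timing each; stop at the first failure."""
    timings = {}
    for name, action in steps:
        start = time.perf_counter()
        try:
            action()
        except Exception as exc:
            timings[name] = time.perf_counter() - start
            return {"ok": False, "failed_step": name,
                    "error": repr(exc), "timings_s": timings}
        timings[name] = time.perf_counter() - start
    return {"ok": True, "failed_step": None, "error": None, "timings_s": timings}
```

A Fiori purchase-order check might then be a list such as `[("login", ...), ("open_po_app", ...), ("submit_po", ...), ("verify_confirmation", ...)]`, where each action is whatever your scripting tool provides.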

For SAP GUI or legacy NetWeaver transactions, scripting tools can interact at the protocol level (HTTP, RFC, OData) to simulate key calls without relying on visual automation. This makes tests more stable while still reflecting actual transaction logic.
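As an illustration of a protocol-level check, the sketch below builds a small read-only OData V2 query and validates the JSON envelope that OData V2 services, including SAP Gateway, return for collections (`{"d": {"results": [...]}}`). The host, service path, and entity set used with it are hypothetical placeholders; fetching the URL with a dedicated test account is left to your HTTP client.

```python
import json
from urllib.parse import urlencode


def build_odata_url(base: str, entity_set: str, top: int = 1) -> str:
    """Build a small, read-only OData V2 query suitable for a health check."""
    # safe="$" keeps system query options readable ($top, not %24top).
    query = urlencode({"$top": str(top), "$format": "json"}, safe="$")
    return f"{base.rstrip('/')}/{entity_set}?{query}"


def validate_odata_v2(body: str, min_results: int = 1) -> int:
    """Check the OData V2 JSON envelope and return the number of entries."""
    doc = json.loads(body)
    d = doc.get("d") if isinstance(doc, dict) else None
    results = d.get("results") if isinstance(d, dict) else None
    if not isinstance(results, list):
        raise ValueError("unexpected OData payload: missing d.results")
    if len(results) < min_results:
        raise ValueError(f"expected at least {min_results} entries, got {len(results)}")
    return len(results)
```

For example, `build_odata_url("https://sap-gw.internal.example/sap/opu/odata/sap/ZPO_SRV", "PurchaseOrders")` (a hypothetical custom service) yields `.../ZPO_SRV/PurchaseOrders?$top=1&$format=json`. Asserting on at least one returned entry catches the case where the service answers 200 but the backend returns nothing.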

Oracle ERP and Microsoft Dynamics

Oracle ERP Cloud introduces hybrid visibility challenges: part of the stack is SaaS, part still runs internally. Synthetic monitoring from both public and private agents ensures end-to-end coverage. You can validate login times, dashboard performance, and integration with on-prem data stores.

Microsoft Dynamics 365 follows a similar model. Its authentication chain often involves Azure AD (now Microsoft Entra ID) and corporate SSO. Scripts can reuse tokens or credentials issued by test tenants to validate business processes like lead creation or purchase approvals without disrupting production users.
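For post-login API checks against Dynamics 365, one common pattern is the OAuth 2.0 client-credentials flow against the Microsoft identity platform, using an app registration in a test tenant. The sketch below only assembles the token request; the tenant ID, client ID, secret, and organization URL are placeholders, and the app must also be registered as an application user in the Dynamics environment. This validates service behavior after authentication, not the interactive SSO login itself.

```python
import urllib.request
from urllib.parse import urlencode


def build_token_request(tenant_id: str, client_id: str, client_secret: str,
                        org_url: str) -> urllib.request.Request:
    """Assemble a client-credentials token request for a Dynamics 365 org."""
    token_url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        # '.default' requests the app permissions already granted for this resource.
        "scope": f"{org_url.rstrip('/')}/.default",
    }
    return urllib.request.Request(
        token_url,
        data=urlencode(form).encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

The JSON response carries an `access_token` that the script sends as a bearer token to the org's Web API; caching it until shortly before `expires_in` elapses avoids requesting a new token on every run.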

Custom or Legacy ERP Portals

Not every enterprise runs a commercial suite. Many maintain decades-old web portals or mainframe front-ends with HTML wrappers. These systems benefit most from synthetic checks: they have minimal built-in monitoring, unpredictable performance, and enormous business impact.

Scripts can log in, verify key workflows, and confirm integrations with downstream systems such as HR, payroll, or supply chain APIs. The goal isn’t exhaustive coverage; it’s ensuring that the workflows people rely on every day still complete successfully.
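For older portals with no API, a useful check can be as simple as fetching a page after login and asserting that an expected marker is present and a known error banner is not. A sketch using only the standard library's HTML parser; the marker and error strings are hypothetical and would come from the portal's actual pages.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.parts, self._skip = [], 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def check_page(html: str, must_contain: str,
               must_not_contain=("Error", "Session expired")) -> bool:
    """True if the expected marker is visible and no known error text is."""
    parser = _TextCollector()
    parser.feed(html)
    parser.close()  # flush any buffered trailing text
    text = " ".join(parser.parts)
    return must_contain in text and not any(bad in text for bad in must_not_contain)
```

Checking visible text rather than raw HTML avoids false alarms from words like "Error" that appear only inside scripts.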

Overcoming Common Challenges

Network and Firewall Constraints

Internal networks often block inbound connections from outside by design, so cloud-hosted monitoring nodes can’t reach internal applications. The fix is architectural: deploy agents inside the network that communicate outward through approved channels. They act as local proxies, executing tests inside and forwarding only metrics outside.

For hybrid visibility, combine both approaches—cloud nodes for public endpoints, private agents for internal paths. This blended view captures full user journeys from internet to intranet.
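Running the same check from both vantage points also helps localize a failure. A minimal sketch of that triage logic; the labels are illustrative suggestions, and real diagnosis still needs the step-level results behind each outcome.

```python
def localize(public_ok: bool, private_ok: bool) -> str:
    """Suggest where a fault likely sits, given results from both agent types."""
    if public_ok and private_ok:
        return "healthy"
    if public_ok and not private_ok:
        # Works from the internet, fails from inside: look at the internal path.
        return "internal path (private network, VPN, on-prem dependency)"
    if private_ok and not public_ok:
        # Works from inside, fails from the internet: look at the public edge.
        return "external path (internet edge, public DNS, SaaS ingress)"
    # Both fail: the common component is the likeliest culprit.
    return "shared component (application or backend common to both paths)"
```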

Authentication and Session Expiry

Enterprise sessions expire frequently for security reasons. Synthetic scripts must handle that gracefully—detecting session expiry, triggering a fresh login, and recovering without human intervention. Secrets management tools or dynamic token exchanges keep credentials safe while enabling continuity.
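The recovery loop can be sketched as: try the action with the cached session, and if the application signals expiry, log in again and retry a bounded number of times. The `login` and `action` callables are hypothetical, and so is the expiry signal: each application reports expiry differently (an HTTP 401, a redirect to the login page, an error banner), so the script must translate it into `SessionExpired` itself.

```python
from typing import Callable, Optional


class SessionExpired(Exception):
    """Raised by an action when the app rejects the current session."""


class SessionManager:
    def __init__(self, login: Callable[[], str]):
        self._login = login              # performs a full login, returns a session token
        self._token: Optional[str] = None
        self.logins = 0                  # counts full logins, useful as a metric

    def _fresh_token(self) -> str:
        self._token = self._login()
        self.logins += 1
        return self._token

    def run(self, action: Callable[[str], object], max_relogins: int = 1) -> object:
        """Run action(token), re-authenticating at most max_relogins times on expiry."""
        token = self._token or self._fresh_token()
        for attempt in range(max_relogins + 1):
            try:
                return action(token)
            except SessionExpired:
                if attempt == max_relogins:
                    raise  # re-login budget exhausted: report a genuine auth failure
                token = self._fresh_token()
```

Capping re-logins matters: an unbounded loop would hammer the identity provider and could lock the test account.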

Screenshots and PII Exposure

Some synthetic tools capture screenshots or recordings for debugging. In internal environments, those images may contain confidential data—names, invoices, employee records. Configure agents to mask sensitive regions, blur screenshots, or disable capture entirely. Store results in restricted repositories and apply short retention policies.
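Short retention is easy to enforce mechanically on the agent host. A minimal sketch that deletes captured artifacts older than a cutoff; the directory layout, the `.png` and `.har` patterns, and the 7-day default are assumptions to adapt to your own policy.

```python
import time
from pathlib import Path


def purge_artifacts(root: Path, max_age_days: float = 7.0,
                    patterns=("*.png", "*.har")) -> list:
    """Delete screenshot/trace files older than max_age_days; return what was removed."""
    cutoff = time.time() - max_age_days * 86400
    removed = []
    for pattern in patterns:
        for path in root.rglob(pattern):
            # st_mtime (last modification) stands in for the capture time here.
            if path.is_file() and path.stat().st_mtime < cutoff:
                path.unlink()
                removed.append(path)
    return removed
```

Run on a schedule, this keeps sensitive captures from accumulating even when nobody remembers to clean up after a debugging session.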

Managing Test Accounts and Data

Test accounts should mirror real users’ permissions but operate in isolation. Limit their access, prevent them from triggering actual transactions (like financial postings), and purge test data regularly. This keeps ERP databases clean and avoids skewing analytics with synthetic noise.
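Two simple conventions make this manageable: tag every record a synthetic run creates with a recognizable marker, and have the script refuse actions that would post real transactions. A sketch under those assumptions; the `SYNTH-` prefix and the action names are hypothetical.

```python
import uuid

SYNTH_PREFIX = "SYNTH-"
# Hypothetical actions with real financial or operational side effects.
BLOCKED_ACTIONS = frozenset({"post_to_ledger", "release_payment", "send_to_supplier"})


def synthetic_reference() -> str:
    """Create a unique, clearly tagged reference for a test document."""
    return f"{SYNTH_PREFIX}{uuid.uuid4().hex[:12]}"


def guard_action(action: str) -> None:
    """Stop the script before it triggers a real-world side effect."""
    if action in BLOCKED_ACTIONS:
        raise PermissionError(f"synthetic runs may not perform '{action}'")


def records_to_purge(references) -> list:
    """Select only synthetic records for the cleanup job."""
    return [ref for ref in references if ref.startswith(SYNTH_PREFIX)]
```

Script-side guards are a second line of defense; the primary control remains the test account's restricted authorizations inside the ERP itself.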

How Synthetic Monitoring Adds Value Internally

Internal synthetic monitoring isn’t just about catching outages. It builds operational confidence across departments.

- End-to-end validation verifies that business workflows, from order creation to invoice approval, complete without errors.

- Proactive detection flags login or latency issues before employees notice.

- SLA assurance provides measurable uptime and performance metrics for internal IT services.

- Change impact visibility compares before-and-after results following system updates or infrastructure changes.

- Capacity planning identifies patterns in response times and queue behavior that inform scaling decisions.
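Change-impact comparison, for instance, comes down to comparing response-time distributions from before and after a change. A minimal sketch using the median and 95th percentile; the 20% tolerance is an arbitrary example, and small samples make percentile estimates noisy.

```python
import statistics


def summarize(samples_s):
    """Median and 95th percentile of response times, in seconds."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(samples_s, n=20)[-1]
    return {"median": statistics.median(samples_s), "p95": p95}


def compare(before, after, tolerance: float = 0.20) -> dict:
    """Flag a regression if median or p95 grew by more than `tolerance` (a fraction)."""
    b, a = summarize(before), summarize(after)
    change = {k: (a[k] - b[k]) / b[k] for k in b}
    return {"before": b, "after": a, "change": change,
            "regression": any(v > tolerance for v in change.values())}
```

For the two-second transaction that became twelve seconds, the median change is 5.0 (a 500% increase), far beyond any reasonable tolerance.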

Each of these benefits compounds over time. As organizations modernize their ERP stacks, synthetic data becomes a baseline for every deployment or patch cycle—proof that critical workflows still function under new conditions.

Implementing with Dotcom-Monitor

Building this infrastructure in-house is possible, but maintaining it isn’t trivial. That’s where managed platforms like Dotcom-Monitor’s UserView come into play.

UserView supports private agents that run within secure networks, allowing enterprises to script and execute synthetic transactions against internal web portals or ERP front-ends. Credentials are stored securely, sessions can be reused or renewed automatically, and data never leaves the environment unencrypted.

For SAP, Dynamics, and other enterprise systems, the visual scripting interface lets teams build real-user workflows—navigating dashboards, submitting forms, and validating results—without writing extensive code. Reports consolidate availability, latency, and error data into a single pane of glass, integrating easily with existing observability stacks.

The advantage isn’t just convenience. It’s control. Our hybrid model means you can monitor public APIs, customer portals, and deep internal systems using the same framework, with consistent metrics, unified alerting, and no blind spots.

Future Outlook: Monitoring in Hybrid IT Environments

As enterprises migrate parts of their ERP ecosystems to the cloud, the boundary between internal and external monitoring blurs. An SAP S/4HANA instance might run in a managed cloud, connected to on-prem middleware through VPN tunnels. Synthetic monitoring must evolve accordingly—agents at both ends, validating the chain from user interface to backend service.

Zero-trust architectures will reinforce this shift. Monitoring agents will authenticate just like users, with scoped tokens and least-privilege policies. Synthetic checks will verify not only performance but compliance: ensuring that access controls, encryption, and authentication flows behave as designed.

In that future, synthetic monitoring becomes a governance tool as much as an observability one—evidence that every access path, internal or external, performs reliably and securely.
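The compliance checks described above can test the negative path as well as the positive one: the synthetic account should succeed where it is entitled and be refused where it is not. A minimal sketch, assuming a hypothetical `fetch_status(path)` callable that returns the HTTP status the agent receives; the paths and expected codes are placeholders.

```python
from typing import Callable, Dict, List

# Hypothetical expectations for a least-privilege synthetic account.
EXPECTED_STATUS: Dict[str, int] = {
    "/po/list": 200,          # within the account's scope
    "/payroll/export": 403,   # must be denied
    "/admin/users": 403,      # must be denied
}


def access_violations(fetch_status: Callable[[str], int],
                      expected: Dict[str, int] = EXPECTED_STATUS) -> List[str]:
    """Describe every path whose observed status differs from the access policy."""
    problems = []
    for path, want in expected.items():
        got = fetch_status(path)
        if got != want:
            problems.append(f"{path}: expected {want}, got {got}")
    return problems
```

Some applications signal denial with a redirect or a 404 instead of a 403, so the expected codes have to reflect how each system actually behaves.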

Conclusion

Internal applications are the quiet heartbeat of the enterprise. They don’t show up on public dashboards, but they power payroll, finance, logistics, and operations. When they fail, the business stalls.

Synthetic monitoring extends visibility into those hidden systems. By deploying private agents, managing credentials securely, and modeling real workflows, organizations can measure the performance of SAP, ERP, and other internal applications with the same rigor as public services.

The result isn’t just uptime—it’s assurance. Assurance that the systems no one sees still run the business smoothly.

With Dotcom-Monitor and UserView, enterprises can bring that assurance inside the firewall—combining public and private visibility into one continuous view of performance, reliability, and user experience.