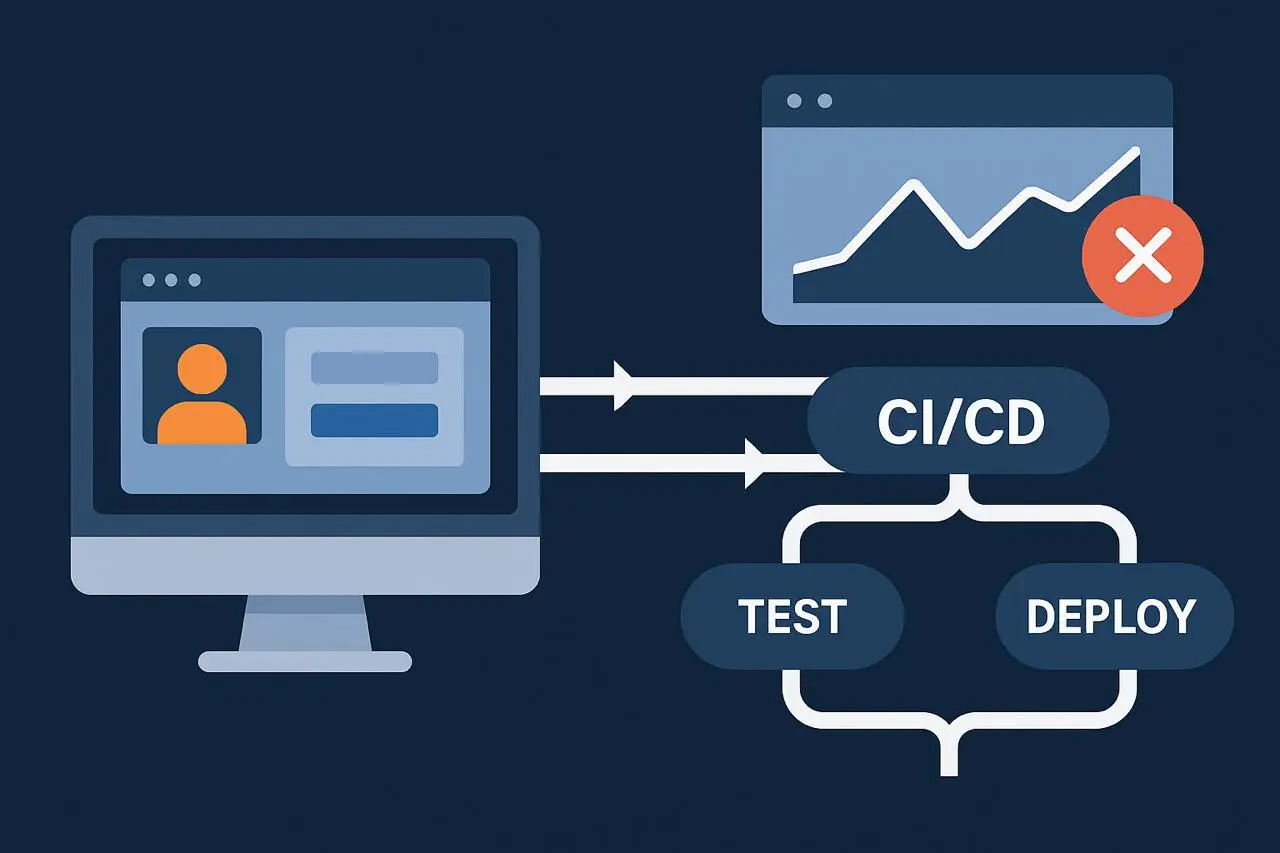

CI/CD pipelines are the heartbeat of modern software delivery. They automate builds, run unit tests, package applications, and deploy them to production with a speed that traditional release cycles could never match. For engineering teams under pressure to move fast, pipelines are the mechanism that makes agility possible.

But pipelines often share the same blind spot: they validate code correctness, not user experience. Unit tests may confirm a function returns the right value, and integration tests may check that APIs respond as expected. Yet those tests rarely simulate what a user actually does. A login screen that hangs on “loading,” a checkout flow that fails because of a broken redirect, or a site serving an expired TLS certificate: all of these can still sail straight through a CI/CD pipeline and land in production.

That’s where synthetic monitoring comes in. By simulating user journeys in the pipeline itself, you catch issues from the perspective that matters most: that of a real user interacting with your application. It’s not about replacing developer tests; it’s about complementing them with a layer that validates the experience end to end.

What Is Synthetic Monitoring in a CI/CD Context?

Synthetic monitoring runs scripted interactions—a login, a form submission, a purchase—against your application from the outside. Unlike unit tests, which exercise code paths in isolation, synthetic checks behave like real users. They load pages in a browser, follow redirects, and validate responses.

In a CI/CD pipeline, synthetic monitoring becomes a quality gate. Code doesn’t just have to compile—it has to actually work. Pipelines that integrate these tests ensure every release is judged not only on technical correctness, but also on functional reliability and user-facing performance.
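As a minimal sketch of such a gate, assuming Python 3.9+ and only the standard library, the script below requests a page, checks the HTTP status and an expected piece of visible text, and turns the result into an exit code. CI systems generally treat a non-zero exit code as a failed step, which is what stops the pipeline. The names `run_check` and `main`, and the choice to match on visible text, are illustrative, not any particular product’s API.

```python
import sys
import urllib.request


def run_check(url: str, expected_text: str, timeout: float = 10.0) -> bool:
    """Pass only if `url` answers HTTP 200 and its body contains `expected_text`.

    urlopen follows redirects, so a broken redirect chain, or an error page at the
    end of one, fails the check the same way it would fail for a user.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and expected_text in body
    except OSError as exc:
        # URLError, HTTPError (4xx/5xx), TLS errors, and timeouts all subclass OSError.
        print(f"check failed for {url}: {exc}", file=sys.stderr)
        return False


def main(argv: list[str]) -> int:
    """Exit code for the CI step: 0 lets the pipeline continue, 1 stops it."""
    if len(argv) != 2:
        print("usage: smoke_check.py URL EXPECTED_TEXT", file=sys.stderr)
        return 2
    url, expected_text = argv
    return 0 if run_check(url, expected_text) else 1
```

A pipeline step could then run something like `python smoke_check.py https://staging.example.com/login "Sign in"` through an entry point such as `sys.exit(main(sys.argv[1:]))`; the file name, URL, and text are placeholders.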

Benefits of Integrating Synthetic Monitoring Into CI/CD

CI/CD has become synonymous with speed. Code moves from commit to production in minutes, and pipelines give teams confidence that what they built won’t immediately collapse. But the definition of “done” in most pipelines is still narrow: the code compiles, unit tests pass, integration checks succeed. None of that answers the most important question—will the application work when a real user tries to use it? That’s the gap synthetic monitoring closes.

- Shift-left reliability: Failures are caught before deployment, not by customers.

- Confidence in releases: Pipelines validate user flows, not just backend logic.

- Regression protection: Synthetic checks flag if core features break after changes.

- Faster incident response: A failed test in the pipeline is a faster alert than a tweet from an angry user.

The cumulative effect is more than just catching bugs earlier. With synthetic monitoring built into CI/CD, pipelines evolve from simple automation engines into trust machines. Every build is judged not only on whether it compiles, but on whether it delivers the experience users expect. The end result isn’t just velocity—it’s velocity without fear, the ability to ship quickly and sleep at night knowing customers won’t be the first to discover what went wrong.

Where to Insert Synthetic Monitoring in the Pipeline

Knowing when to run synthetic checks is just as important as knowing how to run them. CI/CD pipelines thrive on speed, and every additional test competes with the clock. If you overload the pipeline with full user journeys at every stage, you risk slowing down delivery to a crawl. On the other hand, if you run too few checks, you miss the very failures synthetic monitoring was meant to catch. The art is in striking a balance—placing checks at the moments where they deliver maximum value with minimal drag.

Pre-Deployment in Staging

Before code ever touches production, synthetic monitoring can simulate business-critical workflows like login or checkout in the staging environment. If these checks fail, the deployment halts immediately. This is your first guardrail—a way to stop bad builds before they affect real users.
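The halt-on-first-failure behavior can be sketched as an ordered list of named steps. This is a hypothetical structure: each step callable (for example, functions you might name `open_login_page` or `submit_order`) is a placeholder that in practice would drive a browser or an HTTP client against staging.

```python
from typing import Callable, List, Tuple

# A step is a (name, callable) pair; the callable returns True when the step succeeds.
Step = Tuple[str, Callable[[], bool]]


def run_journey(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order and stop at the first failure, like a staging gate."""
    completed: List[str] = []
    for name, action in steps:
        try:
            ok = action()
        except Exception as exc:  # an unexpected error counts as a failed step
            print(f"step '{name}' raised {exc!r}")
            ok = False
        if not ok:
            print(f"journey halted at step '{name}'")
            return False, completed
        completed.append(name)
    return True, completed
```

Recording which steps completed lets the failure report name the broken stage (for example, that the journey halted at checkout) rather than only stating that the journey failed.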

Post-Deployment Smoke Tests

The moment a deployment hits production, a second round of synthetic checks should fire. These tests validate that the live environment is healthy, endpoints respond correctly, and critical flows still function. Staging is useful, but only production confirms that nothing broke in translation.
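A post-deploy smoke test is usually a handful of fast probes rather than a full journey. The sketch below, in standard-library Python with hypothetical paths such as `/health`, probes several production endpoints in parallel and lists any that did not return HTTP 200, so one slow endpoint does not serialize the whole check.

```python
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional, Tuple


def probe(url: str, timeout: float = 5.0) -> Tuple[str, Optional[int]]:
    """Return (url, HTTP status), or (url, None) when no response arrived at all."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return url, resp.status
    except urllib.error.HTTPError as exc:
        return url, exc.code  # 4xx/5xx responses still carry a status code
    except OSError:
        return url, None  # DNS failure, refused connection, TLS error, or timeout


def smoke_test(base_url: str, paths: List[str], workers: int = 8) -> List[str]:
    """Probe every path in parallel; describe each one that did not answer 200."""
    urls = [base_url.rstrip("/") + path for path in paths]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(probe, urls))  # map preserves input order
    return [f"{url} -> {status}" for url, status in results if status != 200]
```

An empty list means the deployment passed; anything else can be printed and turned into a failing exit code for the post-deploy job.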

Scheduled Regression Runs

Not every issue reveals itself at deploy time. Drift in dependencies, configuration changes, or external service failures can surface days later. Scheduled synthetic runs—daily, weekly, or aligned with business events—provide a safety net, ensuring workflows continue to work long after code is shipped.
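Certificate expiry is a good example of slow decay: nothing in the code changes, yet the site breaks on a fixed date. A minimal scheduled check, assuming the host serves TLS on port 443 and that a 14-day warning window is acceptable (both illustrative choices), could look like this:

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter date, e.g. 'Jun  1 12:00:00 2030 GMT'."""
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch (UTC)
    current = time.time() if now is None else now
    return (expires_at - current) / 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Complete a verified TLS handshake and return the server certificate's notAfter field."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def certificate_ok(host: str, min_days: float = 14.0) -> bool:
    """Scheduled check: fail if the certificate is invalid or expires within `min_days`."""
    try:
        return days_until_expiry(fetch_not_after(host)) >= min_days
    except OSError:
        # An already-expired or otherwise invalid certificate fails verification during
        # the handshake (ssl.SSLCertVerificationError is an OSError), as do network errors.
        return False
```

Running this on a daily schedule gives two weeks of warning instead of discovering the expiry when users see a browser security error.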

These stages together form a layered defense. Staging checks block regressions early, post-deploy smoke tests confirm success in production, and scheduled runs protect against the slow decay of reliability over time. With synthetic monitoring at these points, your pipeline becomes more than a conveyor belt for code—it becomes a gatekeeper for user experience.

Best Practices for Synthetic Monitoring in CI/CD

Synthetic monitoring can strengthen CI/CD pipelines, but it only delivers real value when it’s approached deliberately. Slapping a scripted login onto a build job might work once or twice, but without discipline those tests quickly become flaky, slow, or irrelevant. The goal isn’t to run the most checks possible—it’s to run the right checks in the right way so that pipelines remain fast while still protecting user experience.

1. Start Small

Focus on one or two workflows that are critical to the business, like authentication or checkout. These flows carry the most risk if broken and provide the most benefit if validated early. Once those are reliable, expand to secondary journeys.

2. Script Resiliently

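CI/CD depends on consistency, but frontends often change rapidly. Prefer stable selectors, such as dedicated test attributes, accessible roles, or visible text, over auto-generated IDs, and wait for explicit conditions (an element becoming visible, a spinner disappearing) instead of fixed sleeps, so that loading delays and A/B variants don’t cause false failures. A resilient script separates genuine failures from cosmetic changes, keeping your pipeline trustworthy.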
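Browser frameworks ship their own explicit waits (Selenium’s `WebDriverWait`, Playwright’s auto-waiting), and those are the ones to use inside browser scripts. The underlying pattern is simple enough to sketch in plain Python; the helper name `wait_until` and its default timings are hypothetical:

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 10.0, interval: float = 0.25) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    This is the explicit-wait pattern: wait for a specific state (an element is
    visible, an API reports 'ready') instead of sleeping for a fixed time.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

The check then fails only if the expected state never appears within the timeout, not because the page happened to load a second slower than usual.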

3. Keep Checks Fast

Synthetic monitoring doesn’t have to mirror full regression suites. In the pipeline, keep tests light—simple smoke flows that run in seconds. Reserve deeper multi-step scenarios for scheduled monitoring outside the release path.
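One way to keep pipeline checks light is to give each one an explicit time budget and treat an overrun as a failure, so slow flows get noticed and moved to scheduled monitoring. This is a hedged sketch; `timed_check` and the budget values are illustrative:

```python
import time
from typing import Callable, Tuple


def timed_check(check: Callable[[], bool], budget_seconds: float) -> Tuple[bool, float]:
    """Run a check; fail it if it is functionally wrong OR slower than its budget."""
    start = time.perf_counter()
    passed = check()
    elapsed = time.perf_counter() - start
    if elapsed > budget_seconds:
        print(f"check exceeded its {budget_seconds:.2f}s budget ({elapsed:.2f}s)")
        passed = False
    return passed, elapsed
```

The wrapper measures duration but does not interrupt a slow check; per-request timeouts (such as the `timeout` argument to `urllib.request.urlopen`) are what actually cap how long a step can hang.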

4. Use Dedicated Accounts

Production data should never be polluted by test traffic. Dedicated accounts, ideally scoped to test environments or flagged in production, prevent synthetic monitoring from creating noise or triggering false business activity.
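A common way to flag synthetic traffic is to mark every request it sends and to keep the test account’s credentials in CI secrets. In the sketch below, the header `X-Synthetic-Test`, the `User-Agent` string, and the environment variables `SYNTHETIC_USER` and `SYNTHETIC_PASSWORD` are hypothetical names; the application and analytics pipeline would need to be configured to recognize and filter them.

```python
import os
import urllib.request
from typing import Optional, Tuple

# Hypothetical marker; agree on a name with the teams that own analytics and billing.
SYNTHETIC_HEADER = "X-Synthetic-Test"


def synthetic_request(url: str, data: Optional[bytes] = None) -> urllib.request.Request:
    """Build a request that identifies itself as synthetic traffic."""
    return urllib.request.Request(
        url,
        data=data,
        headers={
            SYNTHETIC_HEADER: "true",
            "User-Agent": "synthetic-monitor/1.0",  # hypothetical, but easy to filter in logs
        },
    )


def load_test_credentials() -> Tuple[str, str]:
    """Read the dedicated test account from CI secrets instead of hard-coding it."""
    return os.environ["SYNTHETIC_USER"], os.environ["SYNTHETIC_PASSWORD"]
```

Marking traffic at the request level means even a check that runs against production can be excluded from dashboards, conversion metrics, and fraud rules.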

5. Define Clear Policies

Not every failure should block a release. Decide in advance which synthetic checks are “gates” and which are “warnings.” That distinction prevents alert fatigue and ensures engineers treat failed checks with the seriousness they deserve.
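The gate/warning split can be encoded directly in the check results. A minimal sketch, assuming each check is tagged as blocking or non-blocking ahead of time; the `CheckResult` type and `release_exit_code` function are hypothetical:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CheckResult:
    name: str
    passed: bool
    blocking: bool  # True = release gate, False = warning only


def release_exit_code(results: List[CheckResult]) -> int:
    """Return 1 if any blocking check failed; report non-blocking failures as warnings."""
    exit_code = 0
    for result in results:
        if result.passed:
            continue
        if result.blocking:
            print(f"GATE FAILED: {result.name}")
            exit_code = 1
        else:
            print(f"warning: {result.name} failed (not blocking this release)")
    return exit_code
```

Because the policy lives in data rather than in ad hoc pipeline logic, promoting a warning to a gate is a one-field change that can be reviewed like any other code change.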

Handled with this level of care, synthetic monitoring becomes more than a safety net. It turns pipelines into guardrails that enforce quality without slowing down the team. Instead of being a bottleneck, it becomes the mechanism that allows fast releases to also be safe releases.

Common Monitoring Challenges and How to Solve Them

Synthetic monitoring in CI/CD looks simple on paper: write a script, drop it into the pipeline, and let it run. In practice, it’s messier. Applications evolve, environments drift, and pipelines are sensitive to noise. Without forethought, monitoring can turn from a safeguard into a distraction. Recognizing the pitfalls early, and planning for them, keeps synthetic checks useful instead of brittle.

- Flaky Tests — Scripts fail not because the app is broken, but because a dynamic element changed or a page loaded slowly. The fix is disciplined scripting: stable selectors, explicit waits, and resilient flows.

- Environment Drift — Staging rarely mirrors production perfectly. Config mismatches, missing data, or dependency differences can produce misleading results. Post-deploy smoke tests in production are the most reliable safeguard, because they exercise the environment real users actually reach.

- Alert Fatigue — Too many probes or overly sensitive thresholds overwhelm teams with noise. Focus checks on critical user journeys, tune thresholds, and funnel results into meaningful dashboards.

- Integration Overhead — If monitoring tools don’t plug smoothly into CI/CD, engineers will avoid them. Favor platforms with APIs, CLI hooks, or native plugins so checks feel like part of the pipeline, not a bolt-on.

- Cost Awareness — Full browser checks on every commit add time and expense. Balance coverage by keeping pipeline checks light and scheduling deeper journeys at slower cadences.
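For alert fatigue in particular, a common tactic is to alert only after several consecutive failures of the same check, so a single transient blip does not page anyone. The sketch below assumes a threshold of three, which is an illustrative default rather than a recommendation; the `AlertPolicy` class is hypothetical.

```python
from collections import defaultdict


class AlertPolicy:
    """Alert only after `threshold` consecutive failures of the same check."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.streaks = defaultdict(int)  # check name -> current run of consecutive failures

    def record(self, check_name: str, passed: bool) -> bool:
        """Record one result; return True exactly when the failure streak reaches the threshold."""
        if passed:
            self.streaks[check_name] = 0  # any success resets the streak
            return False
        self.streaks[check_name] += 1
        return self.streaks[check_name] == self.threshold
```

Alerting exactly when the streak reaches the threshold, rather than on every failure after it, also keeps a long outage from producing a fresh alert on every run.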

Success here depends as much on process and tooling as on the idea itself. When tests are resilient, environments aligned, and signals prioritized, synthetic monitoring strengthens the pipeline instead of weighing it down—protecting both speed and reliability in equal measure.

Synthetic Monitoring Tools for CI/CD Pipelines

Choosing the right tool can make or break the value of synthetic monitoring in a pipeline. CI/CD thrives on automation, which means your monitoring platform has to be scriptable, stable, and easy to integrate. A good tool adds confidence without slowing builds down, while a poor choice creates flaky tests, failed integrations, and wasted engineering cycles. In practice, teams should prioritize platforms that support complex user journeys, expose automation-friendly APIs, and integrate smoothly with the CI/CD systems they already rely on.

Dotcom-Monitor

Dotcom-Monitor leads here with the EveryStep Web Recorder, which allows teams to script multi-step browser interactions without deep coding expertise. Combined with APIs and flexible integration points, synthetic checks can be dropped directly into Jenkins, GitHub Actions, GitLab, or Azure DevOps pipelines. By blending simplicity with enterprise-grade capability, Dotcom-Monitor validates critical workflows automatically on every release.

Selenium WebDriver

An open-source staple, Selenium provides full control over browsers for scripted tests. It integrates with nearly every CI/CD system, making it ideal for teams that want maximum customization. The tradeoff is maintenance overhead—scripts must be managed and kept resilient against UI changes.

Puppeteer

Built on the Chrome DevTools Protocol, Puppeteer gives developers a fast, scriptable way to run headless or headed browser checks. It’s particularly well-suited for validating single-page applications. Lightweight and developer-friendly, it pairs well with CI/CD pipelines for targeted synthetic flows.

Playwright

Playwright, maintained by Microsoft, extends the browser automation model to multiple engines (Chromium, Firefox, WebKit). Its auto-waiting and web-first assertions reduce flakiness, and built-in parallel execution keeps suites fast, making it a strong option for teams that need cross-browser confidence in their pipelines.

cURL and Shell Scripts

For simple API-level checks, lightweight shell scripts using cURL can be surprisingly effective. They don’t replace browser-level workflows, but they are fast, reliable, and easy to wire into any pipeline as a first line of defense.
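The same idea can be expressed with Python’s standard library for pipelines that already run Python: a check that, roughly like `curl --fail` plus a JSON assertion, requires HTTP 200 and a specific field in the response. The endpoint and its `{"status": "ok"}` body are hypothetical; real health endpoints vary.

```python
import json
import urllib.request


def api_health_ok(url: str, timeout: float = 5.0) -> bool:
    """Pass only if `url` answers HTTP 200 with a JSON object whose "status" field is "ok"."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            if resp.status != 200:
                return False
            payload = json.load(resp)
    except (OSError, ValueError):
        # OSError covers HTTP 4xx/5xx, refused connections, and timeouts;
        # ValueError covers bodies that are not valid JSON.
        return False
    return isinstance(payload, dict) and payload.get("status") == "ok"
```

Checking a field in the body, not just the status code, catches the case where a proxy or error handler returns 200 with an HTML error page.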

Together, these tools cover the spectrum—from enterprise-ready monitoring platforms like Dotcom-Monitor to open-source frameworks that developers can tailor to their environments. The right fit depends on whether your team values ease of use, depth of features, or full control over scripting and infrastructure.

When tooling works the way it should, synthetic monitoring fades into the background. Pipelines run, checks validate, and the only time you hear about it is when something truly breaks. That’s the goal: not more noise, but more certainty that every release reaching production delivers the experience your users expect.

Real-World Example: Synthetic Monitoring in Action

Picture a typical workflow: a developer pushes code, the pipeline builds, unit tests pass, and the app deploys to staging. At this point, synthetic checks simulate a login and a purchase. If either fails, the pipeline stops, sparing users from broken functionality.

Once the build passes staging, it deploys to production. Synthetic smoke tests immediately run against live endpoints, confirming that everything works. Later that night, scheduled synthetic checks validate workflows again, ensuring stability beyond deployment.

In this scenario, the pipeline doesn’t just automate delivery; it actively safeguards the user experience.

The Future of Synthetic Monitoring in CI/CD

As pipelines evolve, synthetic monitoring will evolve too. Expect to see tighter integration with observability platforms, AI-assisted triage to separate transient failures from true blockers, and expanded coverage into microservices, GraphQL endpoints, and mobile applications.

What won’t change is the core principle: synthetic monitoring brings the user’s perspective into the pipeline. It ensures that speed and reliability move forward together, not in conflict.

Conclusion

CI/CD pipelines have solved the problem of speed. But speed alone can be dangerous when broken user experiences slip through unchecked. Synthetic monitoring closes the gap, embedding user-centric validation directly into the release process.

The formula is simple: run checks in staging before deploy, validate production right after release, and schedule regression runs for ongoing confidence. Pair this with a toolset that integrates cleanly into your pipeline, and synthetic monitoring becomes a natural extension of CI/CD.

In the end, it’s not just about shipping fast—it’s about shipping code that works. Dotcom-Monitor helps teams get there by combining flexible scripting, API and browser tests, and seamless CI/CD integration. With the right tooling in place, every release can be both rapid and reliable.