In the high-stakes world of web performance, every millisecond counts. A single second of delay can result in a 7% reduction in conversions, while 10% of users will abandon a site for every additional second it takes to load [1]. For organizations operating at global scale, Content Delivery Networks (CDNs) have become indispensable infrastructure for delivering fast, reliable user experiences. However, even the most sophisticated CDN deployments face a fundamental challenge that can undermine their effectiveness: cold cache states.

CDN “cold starts” represent one of the most overlooked yet impactful performance bottlenecks in modern web architecture. When content isn’t cached at edge locations, users experience the dreaded cache miss scenario—forcing requests to travel back to origin servers, often thousands of miles away. This results in Time to First Byte (TTFB) spikes that can increase page load times by 200-400%, origin server overload during traffic surges, and inconsistent performance across global regions.

The solution lies in a proactive approach that leverages synthetic monitoring with real browser testing to systematically warm CDN edges before real users arrive. By implementing strategic cache warming using tools like Dotcom-Monitor, organizations can eliminate cold cache delays, ensure consistent global performance, and significantly reduce origin server load. This comprehensive strategy transforms CDNs from reactive caching systems into proactive performance accelerators.

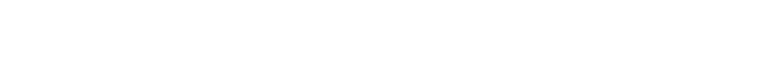

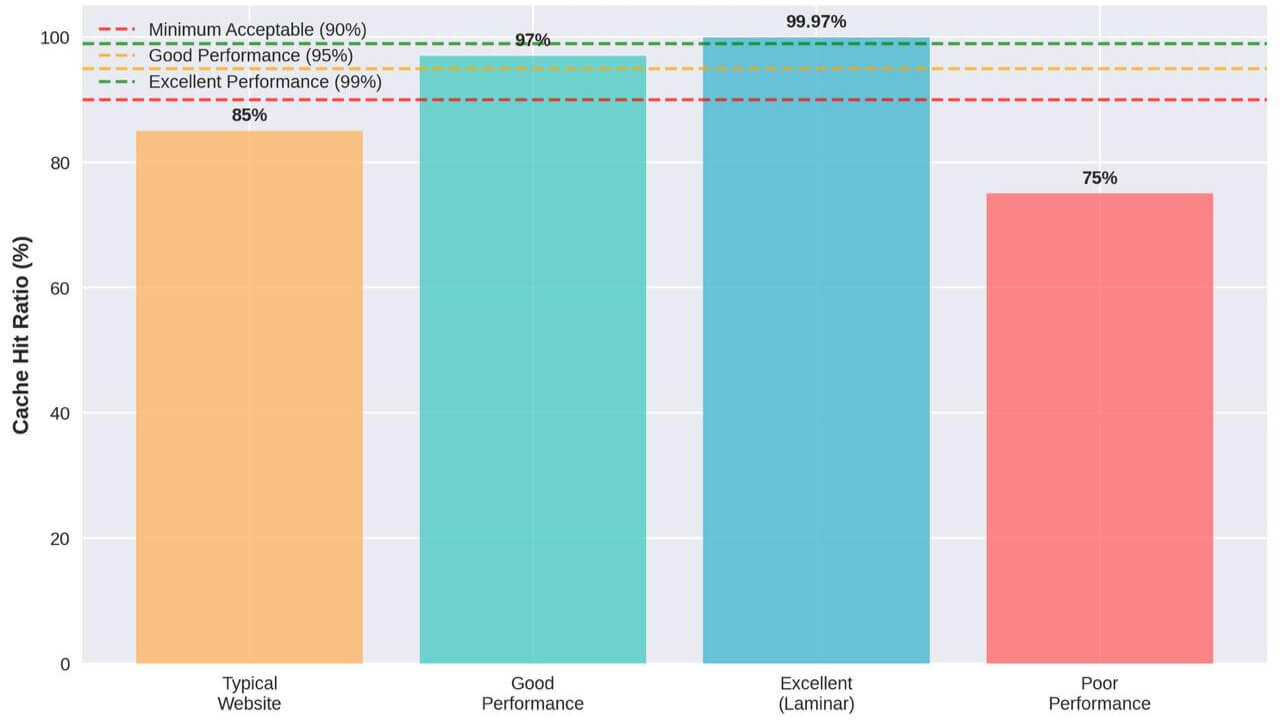

This technical deep-dive explores the mechanics of CDN cold starts, the science behind synthetic monitoring for cache warming, and practical implementation strategies that have helped organizations achieve cache hit ratios exceeding 99.97% while reducing TTFB by up to 72.8%. We’ll examine real-world case studies, performance benchmarks, and provide actionable guidance for implementing your own CDN warm-up strategy using synthetic monitoring.

Understanding the Problem: Cold CDN Edges

To appreciate the value of CDN warm-up strategies, we must first understand the mechanics of edge caching and the performance implications of cold cache states.

The Mechanics of CDN Edge Caching

Content Delivery Networks operate on a simple yet powerful principle: distribute content across a global network of servers (edge locations) to minimize the physical distance between users and the content they request. When functioning optimally, a CDN serves content from the edge server closest to the requesting user, dramatically reducing network latency and improving page load times.

The core of this system is the caching mechanism. When a user requests content through a CDN for the first time, the edge server checks its local cache. If the content isn’t present (a “cache miss”), the edge server must retrieve it from the origin server, cache it locally, and then deliver it to the user. Subsequent requests for the same content can be served directly from the edge cache (a “cache hit”), eliminating the need to return to the origin server [2].
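The miss-then-hit behavior described above can be sketched as a toy model. This is only an illustration: the `EdgeCache` class, its fields, and the fixed TTL are assumptions made for the example, not how any particular CDN is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeCache:
    """Toy model of a single edge location (hypothetical, for illustration only)."""
    ttl_seconds: float
    store: dict = field(default_factory=dict)  # url -> (body, expires_at)
    origin_fetches: int = 0

    def get(self, url: str, now: float) -> tuple[str, str]:
        entry = self.store.get(url)
        if entry is not None and entry[1] > now:
            return "HIT", entry[0]
        # Miss (never cached, or expired): fetch from origin, then cache locally.
        body = self._fetch_from_origin(url)
        self.store[url] = (body, now + self.ttl_seconds)
        return "MISS", body

    def _fetch_from_origin(self, url: str) -> str:
        self.origin_fetches += 1
        return f"content of {url}"


edge = EdgeCache(ttl_seconds=3600)
print(edge.get("/index.html", now=0)[0])     # first request
print(edge.get("/index.html", now=10)[0])    # repeat request within the TTL
print(edge.get("/index.html", now=4000)[0])  # request after the TTL has expired
```

The third request shows why TTL expiry matters: once the entry expires, the edge is cold again even though the content was cached earlier.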

This process works seamlessly for content that’s frequently requested. However, problems arise when content hasn’t been cached at a particular edge location, or when cached content has been evicted due to TTL (Time To Live) expiration or cache purges.

The Cold Cache Problem

A “cold cache” or “cold start” occurs when a CDN edge server receives a request for content that isn’t currently in its cache. This scenario triggers several performance-degrading consequences:

Increased Time to First Byte (TTFB)

When content must be fetched from the origin server, TTFB can increase dramatically, often by 3-4x compared to cached content. Our testing showed TTFB values of 136ms for uncached content versus just 37ms for cached content: the cold request was roughly 3.7x slower, which means a warm cache cut TTFB by 72.8%.
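Because "percent slower" and "percent faster" are easy to confuse, it may help to spell out the arithmetic behind the 136ms and 37ms measurements. The helper name `ttfb_comparison` is ours, introduced only for this sketch.

```python
def ttfb_comparison(cold_ms: float, warm_ms: float) -> dict:
    """Express the cold-vs-warm gap three ways (all derived from the same two numbers)."""
    return {
        "slowdown_factor": cold_ms / warm_ms,                  # how many times slower cold is
        "reduction_pct": (cold_ms - warm_ms) / cold_ms * 100,  # saving relative to cold
        "increase_pct": (cold_ms - warm_ms) / warm_ms * 100,   # penalty relative to warm
    }


result = ttfb_comparison(cold_ms=136, warm_ms=37)
print({name: round(value, 1) for name, value in result.items()})
# {'slowdown_factor': 3.7, 'reduction_pct': 72.8, 'increase_pct': 267.6}
```

The 72.8% figure is the reduction measured from the cold baseline; measured from the warm baseline, the same gap is an increase of about 268%.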

Origin Server Load Spikes

Each cache miss generates a request to the origin server. During high-traffic periods or after cache purges, this can create significant load on origin infrastructure, potentially leading to cascading performance issues or even outages.

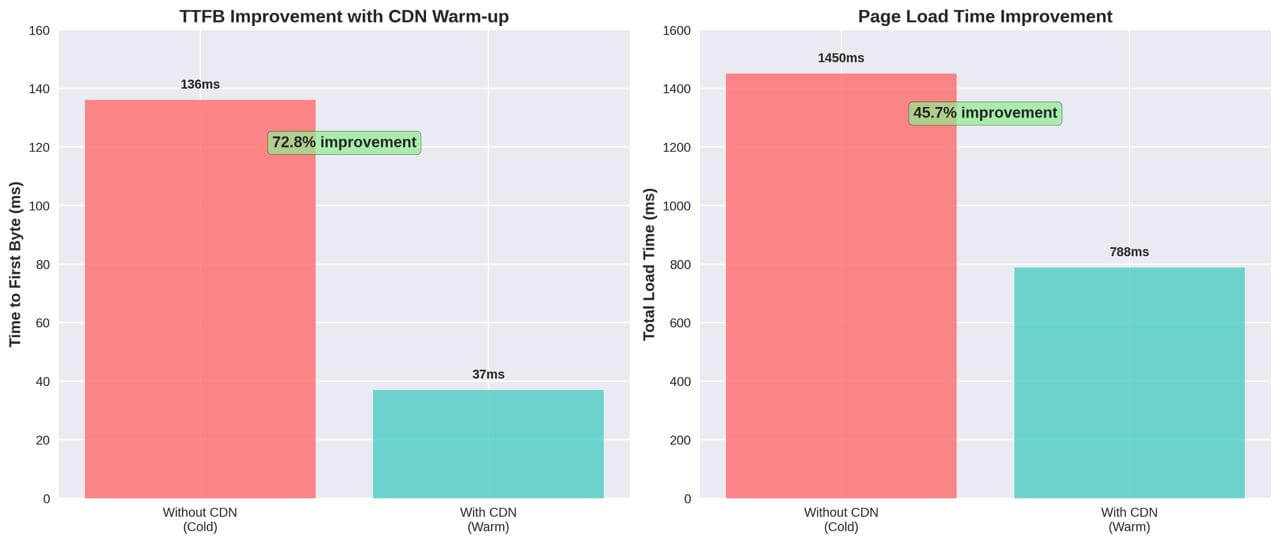

Inconsistent Global Performance

Cold caches disproportionately affect users in regions with less traffic. While popular regions may naturally warm caches through user traffic, less-trafficked regions remain perpetually cold, creating an inconsistent global experience.

Degraded Performance for First Visitors

The first visitor to a region after a cache purge or content update becomes an unwitting “test subject,” experiencing significantly slower performance than subsequent visitors.

Content Types Affected by Cold Starts

Cold cache issues impact virtually all content types served through CDNs, though the severity varies:

Static Assets (JavaScript, CSS, Images)

These files typically comprise the bulk of a website’s payload and are prime candidates for caching. Cold caches force these assets to be retrieved from the origin, dramatically increasing load times for asset-heavy pages. Modern web applications often include large JavaScript bundles that, when uncached, can delay interactivity by several seconds.

Dynamic Content (HTML, API Responses)

While traditionally considered less cacheable, modern CDNs can cache dynamic content using techniques like Edge Side Includes (ESI) and cache segmentation based on cookies or query parameters. Cold caches for these resources directly impact core user experience metrics like TTFB and Time to Interactive.

Streaming Media

Video and audio streaming services are particularly vulnerable to cold cache issues. A cold cache can cause initial buffering delays and quality degradation as the CDN must retrieve high-bandwidth content from origin servers.

Real-World Symptoms of Cold CDN Edges

The impact of cold CDN edges manifests in several observable symptoms that directly affect user experience and business metrics:

Slow First Visits

Users visiting a site for the first time in a region, or immediately after a cache purge, experience significantly longer load times than repeat visitors. This creates a poor first impression and increases bounce rates for new users—precisely the audience segment most businesses are trying to convert.

Geographic Performance Disparities

Performance monitoring often reveals significant discrepancies in load times across different geographic regions, with less-trafficked regions consistently underperforming despite identical infrastructure.

Post-Deployment Performance Dips

After new content deployments or cache invalidations, performance metrics typically show a temporary but significant degradation until caches naturally warm through user traffic.

Inconsistent API Performance

Backend services and APIs experience variable response times depending on cache status, creating unpredictable performance for dependent applications and services.

These symptoms collectively point to a fundamental challenge: relying on actual user traffic to warm CDN caches creates an inherent performance penalty for the first users in each region. This reactive approach to cache warming is particularly problematic for global businesses where consistent performance across all markets is essential for brand perception and conversion rates.

The solution, as we’ll explore in the following sections, lies in proactively warming CDN caches through synthetic monitoring—effectively eliminating the cold cache penalty by ensuring content is pre-cached at edge locations before real users arrive.

What Is CDN Warm-Up and Why Use It?

Having established the performance challenges posed by cold CDN edges, let’s explore the concept of CDN warm-up as a strategic solution to these issues.

Defining CDN Warm-Up

CDN warm-up (also called cache warming or cache preloading) is a proactive technique that involves systematically requesting content from CDN edge locations before real users access it. This process ensures that when actual users request content, it’s already cached at the edge and can be delivered with optimal performance.

At its core, CDN warm-up consists of two primary components:

- Pre-loading assets at edge locations: Systematically making requests to CDN endpoints to ensure content is cached at strategic edge locations around the world.

- Maintaining cache freshness: Periodically refreshing cached content before it expires to prevent cache misses due to TTL expiration.

Unlike reactive caching, which relies on real user traffic to populate caches, proactive warm-up ensures that content is available at the edge from the moment it’s published or updated. This eliminates the performance penalty traditionally imposed on the first visitors to a region or the first users after a cache purge.

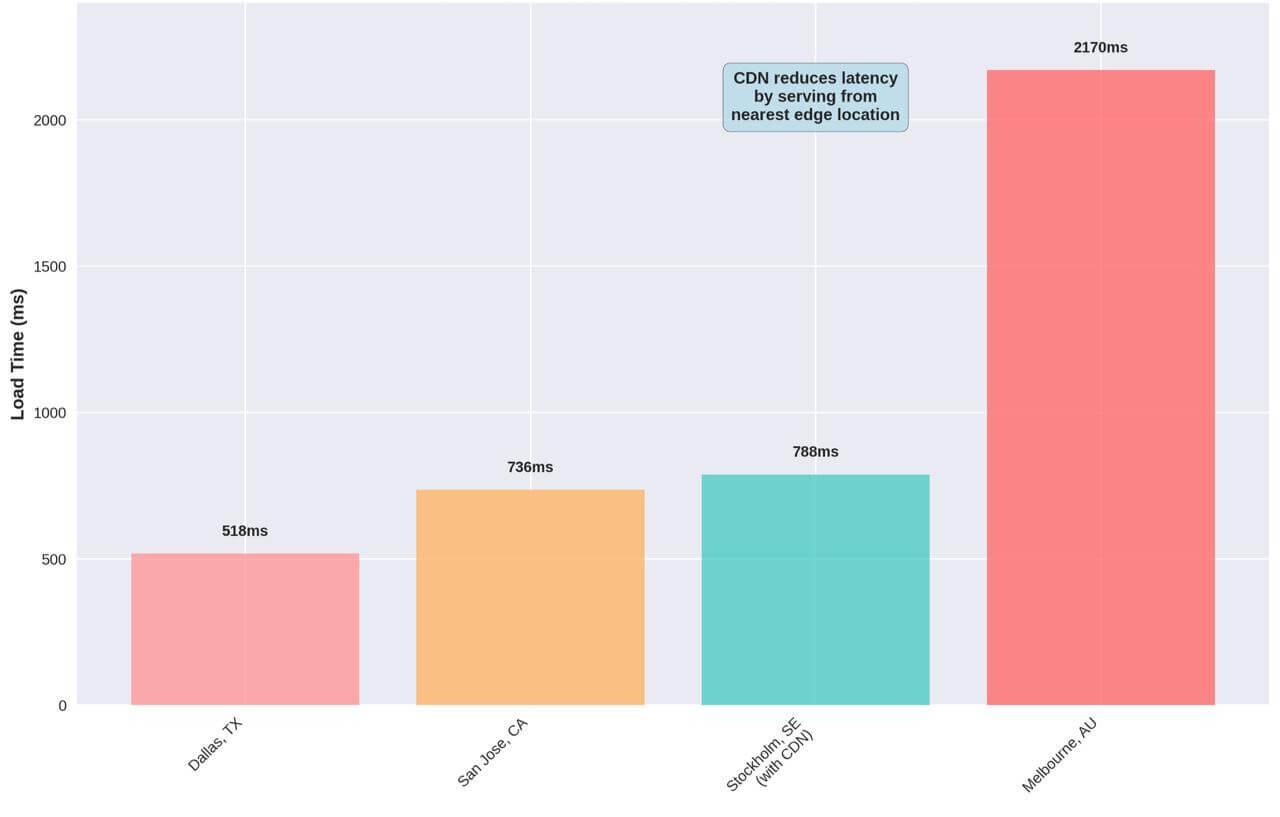

Benefits of CDN warm-up:

- Eliminates cold cache delays

- Improves TTFB for first visitors

- Reduces origin server load

- Ensures consistent global performance

The Warm-Up Process

The CDN warm-up process follows a predictable flow that mirrors natural caching behavior, but with synthetic traffic instead of real users:

- Synthetic monitoring is scheduled to run at predetermined intervals based on content type and importance.

- Requests are sent to CDN edge locations from various geographic locations, simulating real user traffic.

- For cold caches, a cache miss is detected, prompting the edge server to request content from the origin.

- Content is fetched from the origin server and delivered to the synthetic monitoring agent.

- The content is cached at the edge location according to caching rules and TTL settings.

- Subsequent requests (from real users) are served directly from the cache, eliminating origin requests and delivering optimal performance.

This process effectively “primes the pump” for real users, ensuring that the CDN infrastructure is ready to deliver content with minimal latency regardless of when or where users access it.
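The flow above can be sketched in a few lines. Everything here is a stand-in: `fetch(region, url)` is a hypothetical interface representing a synthetic agent in a given region, and `make_fake_cdn` is an in-memory substitute for a real CDN so the example runs without network access.

```python
from typing import Callable

# fetch(region, url) -> "HIT" or "MISS". Hypothetical interface: in practice this
# would be a synthetic monitoring agent in that region requesting the URL.
Fetch = Callable[[str, str], str]


def run_warm_up(regions: list[str], urls: list[str], fetch: Fetch) -> dict[str, list[str]]:
    """Request every URL from every region; return the URLs that were cold per region."""
    cold: dict[str, list[str]] = {}
    for region in regions:
        cold[region] = [url for url in urls if fetch(region, url) == "MISS"]
    return cold


def make_fake_cdn() -> Fetch:
    """In-memory stand-in for a CDN: each region's edge remembers what it has served."""
    cached: set[tuple[str, str]] = set()

    def fetch(region: str, url: str) -> str:
        key = (region, url)
        if key in cached:
            return "HIT"
        cached.add(key)  # the edge stores the object after fetching it from origin
        return "MISS"

    return fetch


fetch = make_fake_cdn()
regions = ["us-east", "eu-west", "ap-south"]
urls = ["/", "/app.js", "/styles.css"]
first_pass = run_warm_up(regions, urls, fetch)   # synthetic traffic absorbs the misses
second_pass = run_warm_up(regions, urls, fetch)  # what a real user would see afterwards
print(sum(len(v) for v in first_pass.values()), "misses on the warm-up pass")   # 9
print(sum(len(v) for v in second_pass.values()), "misses on the next pass")     # 0
```

Note that the fake CDN never evicts or expires entries; a real edge does, which is why warming has to be repeated on a schedule.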

Key Benefits of CDN Warm-Up

Implementing a systematic CDN warm-up strategy delivers several measurable benefits that directly impact both user experience and infrastructure efficiency:

Improved Time to First Byte (TTFB)

TTFB is a critical performance metric that measures the time from when a user makes a request to when they receive the first byte of data in response. Our testing demonstrates that warm CDN caches can reduce TTFB by up to 72.8% compared to cold caches, from 136ms down to just 37ms. Because every later loading milestone waits on the first byte, this improvement carries through to metrics such as First Contentful Paint (FCP) and the Core Web Vital Largest Contentful Paint (LCP), which are key factors in both user experience and search engine rankings [3].

Enhanced Cache Hit Ratio

Cache hit ratio—the percentage of content requests served directly from cache—is perhaps the most direct measure of CDN efficiency. A well-implemented warm-up strategy can increase cache hit ratios from typical levels of 85-90% to over 99%, as demonstrated in the Laminar case study where they achieved an impressive 99.97% cache hit ratio through strategic cache warming [4].

More Stable Performance Across Global Regions

By proactively warming caches in all edge locations, organizations can deliver consistent performance regardless of regional traffic patterns. This eliminates the common problem where high-traffic regions enjoy good performance while lower-traffic regions suffer from perpetually cold caches.

Reduced Origin Server Load

Every cache miss generates a request to the origin server. By increasing cache hit ratios through warm-up strategies, organizations can dramatically reduce origin server load, particularly during traffic spikes or after content updates. At a cache hit ratio of 99.97%, only about 0.03% of requests reach the origin, allowing for more efficient infrastructure sizing and reduced costs.
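The relationship between cache hit ratio and origin load is simple arithmetic, sketched below. The 85% starting point is the low end of the "typical" range quoted earlier, and the request volume of one million is an arbitrary figure chosen for the example.

```python
def origin_requests(total_requests: int, hit_ratio: float) -> int:
    """Requests that miss the cache and therefore reach the origin."""
    return round(total_requests * (1.0 - hit_ratio))


def origin_reduction_pct(hit_ratio_before: float, hit_ratio_after: float) -> float:
    """Percentage drop in origin requests when the hit ratio improves."""
    miss_before = 1.0 - hit_ratio_before
    miss_after = 1.0 - hit_ratio_after
    return (1.0 - miss_after / miss_before) * 100


total = 1_000_000  # arbitrary example volume
print(origin_requests(total, 0.85))    # 150000 requests reach the origin
print(origin_requests(total, 0.9997))  # 300 requests reach the origin
print(round(origin_reduction_pct(0.85, 0.9997), 1))  # 99.8
```

The origin load depends on the miss ratio rather than the hit ratio, so a gain of about 15 percentage points in hit ratio cuts origin traffic by roughly 99.8% in this example.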

Improved Resilience During Traffic Spikes

Traffic spikes—whether from marketing campaigns, product launches, or viral content—can overwhelm origin infrastructure if caches aren’t properly warmed. Proactive cache warming ensures that CDN infrastructure can absorb these spikes without degrading performance or overloading origin servers.

Enhanced Performance After Deployments

Content deployments and cache invalidations typically create a temporary performance degradation as caches go cold. Implementing post-deployment warm-up procedures ensures that new content is pre-cached at edge locations, eliminating this deployment penalty.

Before and After: The Impact of CDN Warm-Up

The following comparison illustrates the dramatic performance improvements achievable through strategic CDN warm-up:

This comprehensive before-and-after analysis demonstrates the transformative impact of CDN warm-up across all key performance metrics. The most dramatic improvement is seen in origin requests, which are reduced by 99.97%—effectively eliminating the load on origin infrastructure for cached content.

The combined effect of these improvements creates a virtuous cycle: faster response times lead to better user engagement, which increases conversion rates and reduces bounce rates. Meanwhile, reduced origin load improves infrastructure efficiency and reduces costs, creating both top-line and bottom-line benefits for the organization.

In the next section, we’ll explore how synthetic monitoring provides the ideal mechanism for implementing an effective CDN warm-up strategy.

Synthetic Monitoring as the Solution

Synthetic monitoring emerges as the ideal mechanism for implementing effective CDN warm-up strategies. Unlike traditional monitoring approaches that rely on real user data, synthetic monitoring provides the control, consistency, and global reach necessary for systematic cache warming.

Understanding Synthetic Monitoring

Synthetic monitoring involves using automated scripts or agents to simulate user interactions with web applications and services. These synthetic transactions run continuously from various geographic locations, providing consistent performance data and enabling proactive issue detection. In the context of CDN warm-up, synthetic monitoring serves a dual purpose: performance monitoring and cache warming.

The key advantages of synthetic monitoring for CDN warm-up include:

Predictable Execution

Synthetic tests run on predetermined schedules, ensuring consistent cache warming regardless of actual user traffic patterns. This predictability is essential for maintaining warm caches in low-traffic regions or during off-peak hours.

Global Coverage

Modern synthetic monitoring platforms like Dotcom-Monitor operate from dozens of global locations, enabling comprehensive cache warming across all CDN edge locations. This global reach ensures that users in any region experience optimal performance.

Controlled Testing Environment

Synthetic tests execute in controlled environments with consistent network conditions, browser configurations, and test parameters. This consistency enables accurate performance measurement and reliable cache warming.

Real Browser Simulation

Advanced synthetic monitoring uses real browsers (Chrome, Firefox, Safari) to execute tests, ensuring that cache warming accurately reflects real user behavior and triggers the same caching mechanisms that actual users would encounter.

How Synthetic Monitoring Warms CDN Caches

The process of using synthetic monitoring for CDN warm-up involves several strategic components:

Geographic Distribution Strategy

Effective CDN warm-up requires synthetic monitoring agents distributed across key geographic regions. The goal is to ensure that every major CDN edge location receives regular synthetic traffic to maintain warm caches. This typically involves:

- Primary Markets: Major metropolitan areas and high-traffic regions should have synthetic agents running every 5-15 minutes to ensure caches remain consistently warm.

- Secondary Markets: Mid-tier markets and regional centers benefit from synthetic monitoring every 15-30 minutes, balancing cache freshness with resource efficiency.

- Emerging Markets: Even low-traffic regions should receive synthetic monitoring every 30-60 minutes to prevent cold cache scenarios for the occasional visitors from these areas.

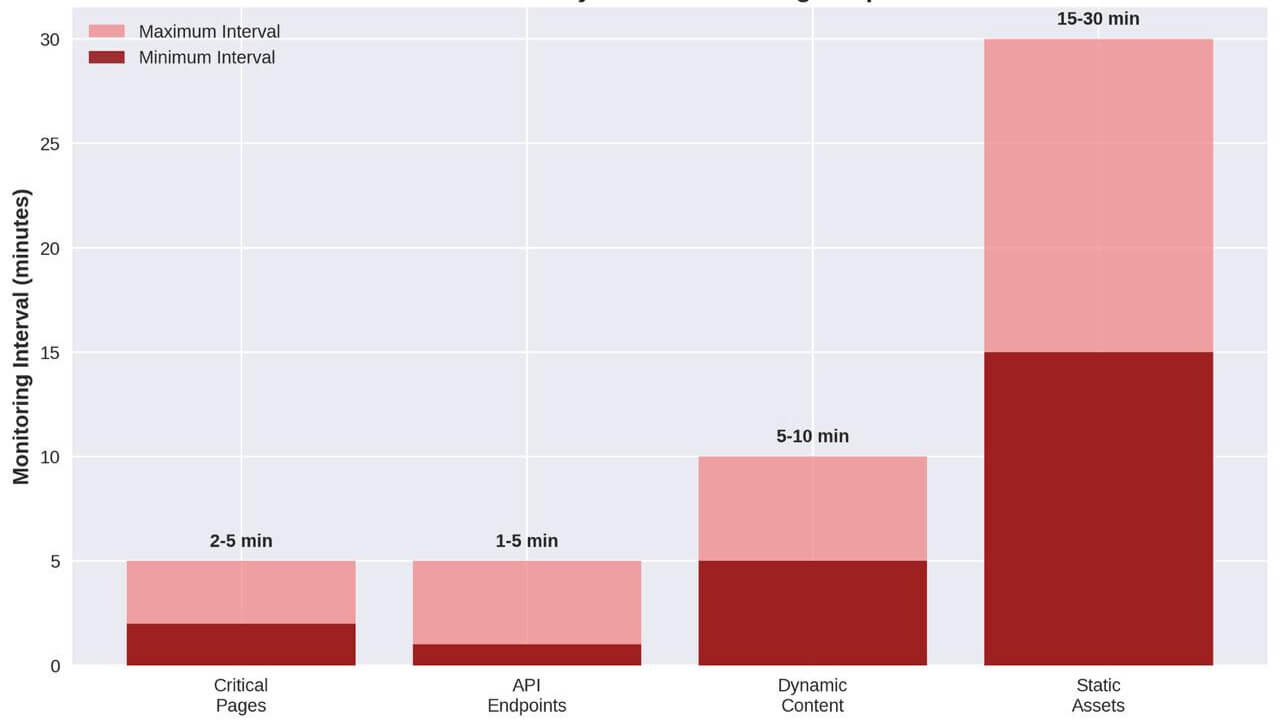

Content Prioritization

Not all content requires the same level of cache warming. A strategic approach prioritizes content based on business impact and user experience importance:

- Critical Path Resources: Homepage content, primary navigation assets, and core application functionality should receive the most frequent synthetic monitoring—typically every 2-5 minutes.

- Dynamic Content: API endpoints, personalized content, and frequently updated resources benefit from monitoring every 5-10 minutes to balance freshness with performance.

- Static Assets: CSS, JavaScript, and image files can typically be warmed every 15-30 minutes, as these resources have longer TTL values and change less frequently.

- Long-tail Content: Less critical pages and assets can be warmed every 30-60 minutes, ensuring they’re available when needed without excessive resource consumption.

Timing and Frequency Optimization

The timing of synthetic monitoring for CDN warm-up requires careful consideration of several factors:

- Content TTL Alignment: Synthetic monitoring frequency should align with content TTL settings to ensure caches are refreshed before expiration. For content with a 1-hour TTL, synthetic monitoring every 45 minutes ensures the cache never goes cold.

- Traffic Pattern Consideration: Monitoring frequency can be adjusted based on expected traffic patterns. High-traffic periods may require more frequent warming to handle increased cache pressure, while off-peak hours can use reduced frequencies.

- Resource Optimization: While more frequent monitoring improves cache warmth, it also consumes more resources and generates more origin requests. The optimal frequency balances performance benefits with resource costs.
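The TTL alignment rule can be expressed as a small helper. This is a sketch under stated assumptions: the 0.75 safety factor is chosen to reproduce the 45-minute example above, and the 60-second floor is an assumed lower bound on how often a test can run.

```python
def warming_interval(ttl_seconds: int, safety_factor: float = 0.75, min_interval: int = 60) -> int:
    """Return a warming interval (seconds) that fires before the cached object expires.

    safety_factor and min_interval are illustrative defaults, not recommendations.
    """
    if not 0 < safety_factor < 1:
        raise ValueError("safety_factor must be between 0 and 1")
    return max(min_interval, int(ttl_seconds * safety_factor))


print(warming_interval(3600) // 60, "minutes")   # 45 minutes for a 1-hour TTL
print(warming_interval(86400) // 3600, "hours")  # 18 hours for a 24-hour TTL
print(warming_interval(30), "seconds")           # clamped to the 60-second floor
```

When the TTL is shorter than the minimum interval, as in the last call, scheduled warming alone cannot keep the object fresh, and a longer TTL or stale-serving directive is the better lever.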

Dotcom-Monitor: A Comprehensive Solution

Dotcom-Monitor provides a robust platform for implementing CDN warm-up strategies through synthetic monitoring. The platform offers several features specifically valuable for cache warming:

Global Monitoring Network

Dotcom-Monitor operates monitoring agents from over 30 global locations, providing comprehensive coverage of major CDN edge locations. This extensive network ensures that cache warming can reach virtually any geographic region where users might access content.

Real Browser Testing

The platform uses real browsers (Chrome, Firefox, Internet Explorer, Safari) to execute synthetic tests, ensuring that cache warming accurately simulates real user behavior. This real browser approach is crucial because it triggers the same caching mechanisms, JavaScript execution, and resource loading patterns that actual users experience.

Flexible Scheduling Options

Dotcom-Monitor supports sophisticated scheduling options that enable fine-tuned control over cache warming frequency. Tests can be scheduled at intervals ranging from 1 minute to several hours, with different schedules for different content types or regions.

Comprehensive Performance Metrics

Beyond cache warming, Dotcom-Monitor provides detailed performance metrics that help optimize CDN configurations. Key metrics include:

- Time to First Byte (TTFB): Measures the responsiveness of CDN edge servers and helps identify cold cache scenarios.

- Full Page Load Time: Provides end-to-end performance visibility, including the impact of cache warming on overall user experience.

- Resource-Level Timing: Breaks down performance by individual resources, enabling targeted optimization of specific assets.

- Geographic Performance Comparison: Compares performance across different regions to identify areas needing additional cache warming.

Advanced Scripting Capabilities

For complex applications, Dotcom-Monitor supports advanced scripting that can simulate sophisticated user journeys. This capability is particularly valuable for warming caches for dynamic content that requires specific user interactions or authentication.

Implementing Synthetic Monitoring for CDN Warm-Up

A successful implementation of synthetic monitoring for CDN warm-up follows a structured approach:

Phase 1: Assessment and Planning

Begin by analyzing current CDN performance to identify cold cache scenarios and geographic performance disparities. This assessment should include:

- Performance Baseline: Establish current TTFB, cache hit ratios, and geographic performance variations.

- Content Inventory: Catalog all content types, their importance to user experience, and current caching configurations.

- Traffic Analysis: Understand traffic patterns by geography and time to inform monitoring frequency decisions.

Phase 2: Monitoring Strategy Design

Develop a comprehensive monitoring strategy that addresses identified performance gaps:

- Geographic Coverage: Map synthetic monitoring locations to CDN edge locations to ensure comprehensive coverage.

- Content Prioritization: Establish monitoring frequencies based on content importance and business impact.

- Scheduling Optimization: Design monitoring schedules that align with content TTL settings and traffic patterns.

Phase 3: Implementation and Testing

Deploy synthetic monitoring with careful attention to validation and optimization:

- Gradual Rollout: Implement monitoring in phases, starting with the most critical content and highest-impact regions.

- Performance Validation: Continuously monitor the impact of synthetic monitoring on cache hit ratios and performance metrics.

- Optimization Iteration: Adjust monitoring frequencies and coverage based on observed performance improvements.

Phase 4: Ongoing Optimization

Maintain and optimize the synthetic monitoring strategy based on changing requirements:

- Performance Trend Analysis: Regularly analyze performance trends to identify opportunities for optimization.

- Content Evolution: Adjust monitoring strategies as new content is added or existing content changes.

- Seasonal Adjustments: Modify monitoring frequencies based on seasonal traffic patterns or business cycles.

The strategic implementation of synthetic monitoring for CDN warm-up creates a foundation for consistently excellent performance across all geographic regions and content types. In the next section, we’ll explore the specific implementation details and best practices for maximizing the effectiveness of this approach.

Implementation Best Practices

Implementing an effective CDN warm-up strategy using synthetic monitoring requires careful planning and execution. This section provides detailed guidance on best practices for maximizing the effectiveness of your cache warming efforts.

Identifying Critical Content for Warming

Not all content requires the same level of cache warming. A strategic approach focuses resources on the most impactful content:

Critical Path Analysis

Begin by conducting a critical path analysis to identify the resources that have the most significant impact on user experience:

- Core HTML Documents: Homepage, product pages, and high-traffic landing pages form the foundation of user experience and should receive priority warming.

- Render-Blocking Resources: CSS and JavaScript files that block rendering should be warmed aggressively to minimize Time to Interactive (TTI) and First Contentful Paint (FCP).

- Largest Contentful Paint (LCP) Elements: Resources that contribute to LCP, such as hero images or above-the-fold content, directly impact perceived performance and should be prioritized.

- API Endpoints: For dynamic applications, API endpoints that deliver critical data should be included in warming strategies, especially if they implement edge caching.

Content Categorization Matrix

Organize content into categories based on business impact and caching characteristics:

| Content Category | Business Impact | Cache TTL | Recommended Warming Frequency |
|---|---|---|---|
| Critical Path | Very High | 1-4 hours | Every 2-5 minutes |
| Primary Assets | High | 4-24 hours | Every 5-15 minutes |
| Secondary Assets | Medium | 1-7 days | Every 15-30 minutes |
| Long-tail Content | Low | 7+ days | Every 30-60 minutes |

This categorization provides a framework for allocating synthetic monitoring resources efficiently while ensuring comprehensive coverage.
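To see what the matrix implies in synthetic request volume, the tiers can be encoded as data and multiplied out. The interval ranges come from the table above; the URL counts and the 24 monitoring locations are hypothetical inputs for the example.

```python
# Warming interval range per tier in minutes (fastest, slowest), from the matrix above.
WARMING_TIERS = {
    "critical_path": (2, 5),
    "primary_assets": (5, 15),
    "secondary_assets": (15, 30),
    "long_tail": (30, 60),
}

MINUTES_PER_DAY = 24 * 60


def daily_requests(tier: str, url_count: int, locations: int, use_fastest: bool = True) -> int:
    """Synthetic requests per day for one tier: runs per day x URLs x locations."""
    fastest, slowest = WARMING_TIERS[tier]
    interval = fastest if use_fastest else slowest
    return (MINUTES_PER_DAY // interval) * url_count * locations


# Hypothetical site: 20 critical URLs and 500 long-tail URLs, warmed from 24 locations.
print(daily_requests("critical_path", url_count=20, locations=24))                 # 345600
print(daily_requests("critical_path", url_count=20, locations=24, use_fastest=False))  # 138240
print(daily_requests("long_tail", url_count=500, locations=24, use_fastest=False))     # 288000
```

Even at the slowest setting, a large long-tail set can generate more synthetic requests than the critical path, which is the trade-off the tiering is meant to keep visible.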

Optimizing CDN Configuration for Warming

Effective cache warming requires CDN configurations that support and enhance the warming process:

Cache-Control Headers Optimization

Configure Cache-Control headers to maximize caching efficiency while maintaining content freshness:

Cache-Control: public, max-age=3600, s-maxage=86400, stale-while-revalidate=43200

This configuration:

- Makes content publicly cacheable (public)

- Sets browser cache TTL to 1 hour (max-age=3600)

- Sets CDN cache TTL to 24 hours (s-maxage=86400)

- Allows serving stale content while revalidating for 12 hours (stale-while-revalidate=43200)

The stale-while-revalidate directive is particularly valuable for cache warming, as it allows the CDN to serve cached content while asynchronously refreshing it, eliminating cache misses during revalidation.
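As a quick check on what the example header means for each cache layer, the directives can be parsed and turned into time windows. This is a minimal sketch: the parser handles only simple `name=value` directives, and the function names are ours.

```python
def parse_cache_control(header: str) -> dict:
    """Parse a Cache-Control value into {directive: int | str | True} (simple cases only)."""
    directives = {}
    for part in header.split(","):
        name, _, value = part.strip().partition("=")
        if not name:
            continue
        value = value.strip().strip('"')
        directives[name.lower()] = int(value) if value.isdigit() else (value or True)
    return directives


def cache_windows(header: str) -> dict:
    """Freshness windows in seconds for browsers and for shared caches such as a CDN."""
    d = parse_cache_control(header)
    browser_fresh = d.get("max-age", 0)
    edge_fresh = d.get("s-maxage", browser_fresh)  # s-maxage overrides max-age for shared caches
    stale_window = d.get("stale-while-revalidate", 0)
    return {
        "browser_fresh_s": browser_fresh,
        "edge_fresh_s": edge_fresh,
        "edge_may_serve_stale_until_s": edge_fresh + stale_window,
    }


header = "public, max-age=3600, s-maxage=86400, stale-while-revalidate=43200"
print(cache_windows(header))
# {'browser_fresh_s': 3600, 'edge_fresh_s': 86400, 'edge_may_serve_stale_until_s': 129600}
```

With this header, an edge that honors both directives can answer from cache for up to 36 hours after the last origin fetch, refreshing in the background during the final 12.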

Cache Key Customization

Configure CDN cache keys to optimize cache efficiency while ensuring content correctness:

- Exclude Unnecessary Parameters: Remove query parameters that don’t affect content (e.g., tracking parameters) from the cache key.

- Include Vary Headers Selectively: Use Vary headers (e.g., Vary: Accept-Encoding) to cache different versions of content based on client capabilities, but avoid unnecessary variations that fragment the cache.

- Normalize Cache Keys: Implement URL normalization to prevent cache fragmentation (e.g., treat /product and /product/ as the same cache key).
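The cache key rules above can be sketched with the standard library. CDNs apply this logic in their own configuration languages, so treat this as an illustration of the idea; the list of tracking parameters is an assumption and would need to match your own analytics setup.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Assumed list of parameters that do not change the response body.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def cache_key(url: str) -> str:
    """Build a normalized cache key: lowercase host, no trailing slash, no tracking params."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    query = sorted(
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name.lower() not in TRACKING_PARAMS
    )
    key = parts.netloc.lower() + path
    if query:
        key += "?" + urlencode(query)
    return key


print(cache_key("https://Example.com/product/?utm_source=mail&color=red"))
print(cache_key("https://example.com/product?color=red&gclid=abc"))
# both print: example.com/product?color=red
```

Warming tests benefit from the same normalization: if the CDN collapses these variants into one key, a single synthetic request warms all of them.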

Edge Logic for Dynamic Content

For dynamic content that traditionally bypasses caching, implement edge logic to enable partial caching:

- Edge Side Includes (ESI): Use ESI to cache page templates while dynamically including personalized components.

- Surrogate Keys: Implement surrogate keys to enable targeted cache invalidation without purging all content.

- Cache Segmentation: Segment caches based on user characteristics (e.g., logged-in status, geography) to enable caching for different user segments.

Designing Effective Synthetic Monitoring Tests

The design of synthetic monitoring tests directly impacts the effectiveness of cache warming:

Realistic User Simulation

Design tests that accurately simulate real user behavior to ensure proper cache warming:

- Complete Resource Loading: Ensure tests load all resources on a page, including those loaded via JavaScript, to warm the complete asset set.

- User Interaction Simulation: For single-page applications or dynamic content, simulate user interactions (clicks, form submissions) that trigger additional resource loading.

- Device and Browser Variation: Rotate between different browser and device profiles to warm caches for various user agent-specific content variations.

Geographic Distribution Strategy

Implement a geographic distribution strategy that aligns with both user traffic patterns and CDN edge locations:

- Primary Market Coverage: Ensure comprehensive coverage of primary markets with multiple monitoring locations per region.

- Edge Location Mapping: Map synthetic monitoring locations to specific CDN edge locations to ensure direct warming of each edge server.

- Traffic-Based Weighting: Allocate more frequent monitoring to regions with higher traffic volumes while maintaining baseline coverage for all regions.

Monitoring Frequency Optimization

Optimize monitoring frequency based on multiple factors:

- TTL-Based Scheduling: Set the monitoring interval slightly shorter than the content’s TTL so that caches are refreshed before expiration.

- Traffic Pattern Alignment: Increase monitoring frequency during peak traffic hours and reduce it during off-peak periods.

- Staggered Execution: Stagger test execution across different regions to prevent simultaneous origin requests and distribute load evenly.
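Staggered execution can be as simple as spreading start times evenly across the warming interval. The sketch below assumes a scheduler that accepts a per-region start offset in seconds; the region names are placeholders.

```python
def staggered_offsets(regions: list[str], interval_seconds: int) -> dict[str, int]:
    """Assign each region a start offset so runs are spread evenly over one interval."""
    if not regions:
        return {}
    step = interval_seconds / len(regions)
    return {region: round(index * step) for index, region in enumerate(regions)}


offsets = staggered_offsets(["us-east", "eu-west", "ap-south", "sa-east"], interval_seconds=300)
print(offsets)
# {'us-east': 0, 'eu-west': 75, 'ap-south': 150, 'sa-east': 225}
```

With a 5-minute interval and four regions, the origin sees at most one region's cold misses every 75 seconds instead of four at once.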

Handling Special Cases and Edge Scenarios

Several special cases require specific approaches to cache warming:

Content Deployments and Cache Purges

Implement post-deployment warming strategies to minimize performance impact after content updates:

- Staged Warming: After a deployment, execute an accelerated warming sequence starting with the most critical content.

- Purge-and-Warm Automation: Integrate cache purging and warming into the deployment pipeline to automate the process.

- Canary Warming: Begin warming in a subset of regions before expanding to global coverage, allowing for performance validation before full-scale warming.
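A post-deployment step might combine staged and canary warming by building an ordered request plan. This sketch only produces the plan; how each request is issued (and how the purge is triggered) depends on your CDN and monitoring tooling, so those parts are deliberately left out. The priority numbers and region names are hypothetical.

```python
def build_warm_plan(
    urls_by_priority: dict[int, list[str]],
    canary_regions: list[str],
    remaining_regions: list[str],
) -> list[tuple[str, str, str]]:
    """Order warm-up requests: canary regions first, and critical URLs first within each phase.

    Lower priority numbers are warmed earlier. Returns (phase, region, url) tuples.
    """
    plan = []
    for phase, regions in (("canary", canary_regions), ("global", remaining_regions)):
        for priority in sorted(urls_by_priority):
            for region in regions:
                for url in urls_by_priority[priority]:
                    plan.append((phase, region, url))
    return plan


plan = build_warm_plan(
    urls_by_priority={1: ["/", "/checkout"], 2: ["/app.js"]},
    canary_regions=["eu-west"],
    remaining_regions=["us-east", "ap-south"],
)
for step in plan[:4]:
    print(step)
```

A deployment pipeline could pause between the two phases to check TTFB and cache status in the canary region before warming the rest.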

Authenticated Content

For authenticated content that requires specific credentials:

- Test Account Approach: Create dedicated test accounts with representative permissions for synthetic monitoring.

- Authentication Token Rotation: Implement secure token rotation for synthetic tests to maintain security while enabling warming.

- Segment-Based Warming: For content that varies by user segment, create synthetic tests for each major segment to ensure comprehensive warming.

Geographically Restricted Content

For content that varies by geography due to regulations or localization:

- Geo-Specific Test Suites: Create region-specific test suites that account for content variations.

- IP Geolocation Verification: Verify that synthetic monitoring agents correctly trigger geo-specific content variations.

- Regulatory Compliance Checks: Ensure synthetic monitoring complies with regional regulations regarding data access and privacy.

Measuring and Validating Warming Effectiveness

Implement comprehensive measurement to validate the effectiveness of cache warming efforts:

Key Performance Indicators

Track these KPIs to measure warming effectiveness:

- Cache Hit Ratio: The percentage of requests served from cache versus origin. Target: >95% for static content, >90% for dynamic content.

- TTFB by Region: Time to First Byte across different geographic regions. Target: <100ms for cached content.

- Origin Request Volume: The number of requests reaching origin servers. Target: Reduction proportional to cache hit ratio improvement.

- Performance Consistency: Standard deviation of performance metrics across regions. Target: <10% variation between regions.
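Two of these KPIs are easy to compute from raw counts and timings. The sketch below interprets "variation between regions" as the coefficient of variation (standard deviation divided by mean), which is an assumption on our part; the regional TTFB values are made-up sample data.

```python
from statistics import mean, pstdev


def cache_hit_ratio(hits: int, misses: int) -> float:
    """Fraction of requests served from cache."""
    total = hits + misses
    return hits / total if total else 0.0


def regional_variation_pct(ttfb_ms_by_region: dict[str, float]) -> float:
    """Coefficient of variation of TTFB across regions, as a percentage."""
    values = list(ttfb_ms_by_region.values())
    return pstdev(values) / mean(values) * 100


print(f"{cache_hit_ratio(hits=9_850, misses=150):.1%}")  # 98.5%

sample_ttfb = {"us-east": 40.0, "eu-west": 44.0, "ap-south": 48.0}  # made-up values
print(round(regional_variation_pct(sample_ttfb), 2))  # 7.42
```

Under this interpretation, the sample data would meet the target of less than 10% variation between regions.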

Validation Methodologies

Implement these methodologies to validate warming effectiveness:

- A/B Testing: Compare performance between warmed and unwarmed regions to quantify the impact of warming.

- Synthetic-to-RUM Correlation: Correlate synthetic monitoring metrics with Real User Monitoring (RUM) data to validate that warming benefits actual users.

- Cache Status Headers: Analyze cache status headers to directly measure cache effectiveness.
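Cache status can often be read straight from response headers. The header names checked below (`CF-Cache-Status`, `X-Cache`) are examples we chose for the sketch; they vary by CDN, so confirm the names and values your provider actually emits. The standard `Age` header is used as a fallback.

```python
def classify_cache_status(headers: dict[str, str]) -> str:
    """Return "HIT", "MISS", or "UNKNOWN" from response headers.

    Header names and values differ between CDNs; the ones checked here are
    illustrative assumptions, not a complete list.
    """
    normalized = {name.lower(): value.lower() for name, value in headers.items()}
    for name in ("cf-cache-status", "x-cache"):
        value = normalized.get(name, "")
        if "hit" in value:
            return "HIT"
        if "miss" in value:
            return "MISS"
    # A positive Age means the response spent time in a cache before delivery.
    age = normalized.get("age", "")
    if age.isdigit() and int(age) > 0:
        return "HIT"
    return "UNKNOWN"


print(classify_cache_status({"X-Cache": "Hit from cloudfront"}))  # HIT
print(classify_cache_status({"CF-Cache-Status": "MISS"}))         # MISS
print(classify_cache_status({"Content-Type": "text/html"}))       # UNKNOWN
```

Counting these classifications per region and per URL across synthetic runs gives a direct view of where caches are still going cold.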

Case Study: E-Commerce Platform Optimization

A global e-commerce platform implemented a comprehensive CDN warm-up strategy using synthetic monitoring and achieved remarkable results:

Initial State:

- Cache hit ratio: 82%

- Average TTFB: 220ms

- Geographic performance variation: 35%

- Origin server load: High, especially during traffic spikes

Implementation Approach:

- Deployed synthetic monitoring from 24 global locations

- Categorized content into four tiers with tailored warming frequencies

- Optimized CDN configuration with stale-while-revalidate directives

- Implemented post-deployment warming automation

Results After 30 Days:

- Cache hit ratio: 98.5% (+16.5 percentage points)

- Average TTFB: 65ms (-70%)

- Geographic performance variation: 8% (-77%)

- Origin server load: Reduced by 85%

- Conversion rate: Increased by 4.2%

This case study demonstrates the transformative impact of a well-implemented CDN warm-up strategy on both technical performance metrics and business outcomes.

In the next section, we’ll explore advanced techniques for scaling and optimizing CDN warm-up strategies for enterprise-scale implementations.

Advanced Techniques and Scaling Strategies

For organizations operating at enterprise scale, basic CDN warm-up strategies may need to be enhanced with advanced techniques to handle complex architectures, massive content libraries, and global user bases. This section explores sophisticated approaches to scaling and optimizing CDN warm-up for enterprise environments.

Intelligent Warming with Machine Learning

Machine learning can dramatically enhance the efficiency and effectiveness of CDN warm-up strategies by optimizing resource allocation and predicting content needs:

Predictive Cache Warming

Implement predictive models that anticipate content needs based on historical patterns:

- Traffic Pattern Analysis: Use historical traffic data to identify patterns and predict future demand, allowing for preemptive warming of content likely to be requested.

- Content Popularity Prediction: Analyze content engagement metrics to predict which assets will receive high traffic, enabling prioritized warming of trending content.

- Seasonal Trend Modeling: Build models that account for seasonal variations in content popularity, automatically adjusting warming strategies for predictable traffic fluctuations.
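
A full predictive model is beyond a short example, but the idea of ranking content by expected demand can be sketched with an exponentially weighted moving average (EWMA) standing in for a trained model. The function names and the `alpha` smoothing factor are illustrative assumptions.

```python
def ewma_forecast(daily_requests: list[float], alpha: float = 0.5) -> float:
    """Forecast the next value, weighting recent observations more heavily."""
    if not daily_requests:
        return 0.0
    forecast = daily_requests[0]
    for observed in daily_requests[1:]:
        forecast = alpha * observed + (1 - alpha) * forecast
    return forecast


def rank_for_warming(history: dict[str, list[float]], top_n: int) -> list[str]:
    """Return the top_n URLs by forecast demand, highest first."""
    scores = {url: ewma_forecast(counts) for url, counts in history.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_n]
```

A higher `alpha` reacts faster to trending content, while a lower value smooths out one-off spikes. Seasonal effects would need a richer model than this sketch provides.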

Adaptive Frequency Optimization

Implement self-optimizing systems that adjust warming frequencies based on observed performance:

- Performance-Based Adjustment: Automatically increase warming frequency for resources with higher cache miss rates and decrease it for consistently cached resources.

- Cost-Benefit Analysis: Develop algorithms that balance the cost of synthetic monitoring against the performance benefits, optimizing resource allocation for maximum ROI.

- Real-time Adaptation: Implement systems that adjust warming strategies in real-time based on current traffic patterns, CDN performance, and origin server load.
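
One simple way to express performance-based adjustment is a rule that shortens the warming interval when the observed miss rate is above target and lengthens it when the resource stays cached. The thresholds, multipliers, and bounds below are placeholder assumptions, not recommended values.

```python
def next_interval_minutes(
    current: float,
    miss_rate: float,
    target_miss_rate: float = 0.05,
    min_interval: float = 5,
    max_interval: float = 240,
) -> float:
    """Propose the next warming interval for one resource."""
    if miss_rate > target_miss_rate:
        proposed = current / 2      # too many misses: warm twice as often
    elif miss_rate < target_miss_rate / 2:
        proposed = current * 1.5    # comfortably cached: back off to save cost
    else:
        proposed = current          # within tolerance: leave unchanged
    # Clamp so the interval never becomes abusive or effectively disabled.
    return max(min_interval, min(max_interval, proposed))
```

The clamp also acts as a crude cost control, since it caps how much synthetic traffic any single resource can generate.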

Integration with CI/CD Pipelines

Seamless integration with development workflows ensures that cache warming is an integral part of the content delivery lifecycle:

Automated Post-Deployment Warming

Implement automated warming as part of the deployment process:

- Deployment Event Triggers: Configure CI/CD pipelines to trigger warming sequences automatically after successful deployments.

- Content Diff Analysis: Analyze changes between deployments to target warming specifically at new or modified content, optimizing resource usage.

- Progressive Warming: Implement progressive warming that starts with critical paths and expands to cover all content, ensuring essential resources are warmed first.
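
Content diff analysis and progressive warming can be combined in a small post-deployment step. The sketch below assumes each deployment produces a manifest mapping paths to content hashes (a hypothetical format) and that critical paths can be identified by prefix.

```python
def changed_paths(previous: dict[str, str], current: dict[str, str]) -> set[str]:
    """Paths that are new or whose content hash changed since the last deploy."""
    return {path for path, digest in current.items() if previous.get(path) != digest}


def progressive_order(paths: set[str], critical_prefixes: tuple[str, ...]) -> list[str]:
    """Order paths so critical ones are warmed first, then the rest alphabetically."""
    return sorted(paths, key=lambda p: (not p.startswith(critical_prefixes), p))
```

A pipeline step could call `changed_paths` with the two manifests and hand the ordered list to whatever triggers the synthetic checks, so only new or modified content consumes warming budget.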

Canary Deployments with Warming

Combine canary deployment strategies with targeted cache warming:

- Staged Rollout Coordination: Coordinate cache warming with staged content rollouts to ensure each stage has warm caches before user traffic arrives.

- Performance Validation Gates: Use synthetic monitoring performance metrics as deployment gates, rolling back deployments that don’t meet performance thresholds after warming.

- Multi-CDN Synchronization: For multi-CDN architectures, synchronize warming across all providers to ensure consistent performance regardless of which CDN serves a particular user.
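
A performance validation gate can be as simple as comparing post-warming synthetic results against thresholds. In this sketch the `RegionResult` type and `gate_passes` function are hypothetical, and the default thresholds reuse the KPI targets given earlier (TTFB under 100ms, hit ratio above 95%).

```python
from dataclasses import dataclass


@dataclass
class RegionResult:
    region: str
    ttfb_ms: float
    cache_hit_ratio: float


def gate_passes(
    results: list[RegionResult],
    max_ttfb_ms: float = 100.0,
    min_hit_ratio: float = 0.95,
) -> tuple[bool, list[str]]:
    """Return (passed, failing_regions) for a post-warming deployment gate."""
    failures = [
        r.region
        for r in results
        if r.ttfb_ms > max_ttfb_ms or r.cache_hit_ratio < min_hit_ratio
    ]
    return (not failures, failures)
```

Returning the failing regions alongside the verdict lets the pipeline decide between a full rollback and another targeted warming pass.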

Global Scale Optimization

For truly global operations, specialized techniques can optimize warming across diverse geographic regions:

Regional Prioritization Framework

Implement a framework for prioritizing regions based on business impact:

- Market Value Weighting: Allocate warming resources proportionally to the business value of each market, ensuring critical regions receive comprehensive coverage.

- Growth Market Acceleration: Increase warming investment in high-growth markets to support expansion strategies with optimal performance.

- Event-Based Adjustment: Temporarily increase warming in regions hosting major events or promotions to handle anticipated traffic spikes.
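
Market value weighting amounts to splitting a fixed budget of synthetic checks in proportion to per-region weights. The sketch below is one possible approach using largest-remainder rounding; the weights themselves (and how business value maps to them) are assumptions you would supply.

```python
def allocate_checks(budget: int, weights: dict[str, float]) -> dict[str, int]:
    """Split a budget of checks across regions in proportion to their weights."""
    total = sum(weights.values())
    if total <= 0:
        return {region: 0 for region in weights}
    raw = {region: budget * w / total for region, w in weights.items()}
    alloc = {region: int(share) for region, share in raw.items()}
    # Hand out checks lost to rounding, largest fractional remainder first.
    leftover = budget - sum(alloc.values())
    by_remainder = sorted(raw, key=lambda r: raw[r] - alloc[r], reverse=True)
    for region in by_remainder[:leftover]:
        alloc[region] += 1
    return alloc
```

Growth markets or event-based adjustments can be modeled by temporarily raising a region's weight before calling the function.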

Multi-CDN Warming Strategies

For organizations using multiple CDN providers, implement coordinated warming strategies:

- Provider-Specific Optimization: Tailor warming approaches to the specific caching behaviors and edge architectures of each CDN provider.

- Cross-Provider Redundancy: Implement warming redundancy across providers to ensure content remains warm even if traffic is shifted between CDNs.

- Performance-Based Routing Preparation: Warm all CDNs equally to ensure readiness for performance-based traffic routing between providers.

Resource Optimization at Scale

At enterprise scale, optimizing resource usage becomes critical to maintaining cost-effectiveness:

Tiered Warming Strategies

Implement tiered approaches that balance comprehensive coverage with resource efficiency:

- Critical Path Saturation: Provide saturated coverage (frequent warming from all locations) for the most critical resources that directly impact core user experience.

- Functional Representation: For secondary content, use representative sampling that warms a subset of resources to represent entire functional categories.

- Long-Tail Rotation: For extensive content libraries, implement rotational warming that cycles through long-tail content over time, ensuring eventual warming while managing resource consumption.
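
Long-tail rotation can be sketched as a wrapping window over the content list, where each warming cycle picks the next batch. The function name and the cycle counter are illustrative; a real system would persist the counter between runs.

```python
def rotation_batch(urls: list[str], cycle: int, batch_size: int) -> list[str]:
    """Select the batch of long-tail URLs to warm on a given cycle, wrapping around."""
    if not urls or batch_size <= 0:
        return []
    start = (cycle * batch_size) % len(urls)
    count = min(batch_size, len(urls))
    return [urls[(start + i) % len(urls)] for i in range(count)]
```

With N URLs and a batch size of B, every URL is warmed at least once every ceil(N / B) cycles, which makes the trade-off between coverage delay and resource consumption explicit.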

Distributed Execution Architecture

Implement distributed architectures for executing synthetic monitoring at scale:

- Edge-Based Execution: Deploy synthetic monitoring agents at the edge to reduce network latency and more accurately simulate real user conditions.

- Load Distribution: Distribute warming load across time windows to prevent monitoring traffic from creating its own performance issues.

- Regional Execution Clusters: Deploy regional execution clusters that handle warming for geographically proximate edge locations, optimizing network efficiency.
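
Load distribution across a time window can be illustrated with evenly spaced start offsets, so that warming jobs do not all fire at once. This is a minimal sketch with a hypothetical function name; production schedulers often add random jitter on top.

```python
def spread_offsets(job_ids: list[str], window_seconds: int) -> dict[str, float]:
    """Assign each job a start offset so jobs are evenly spaced within the window."""
    if not job_ids:
        return {}
    step = window_seconds / len(job_ids)
    return {job: index * step for index, job in enumerate(job_ids)}
```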

Advanced Monitoring and Analytics

Sophisticated monitoring and analytics capabilities are essential for optimizing enterprise-scale warming strategies:

Comprehensive Performance Dashboards

Implement dashboards that provide holistic visibility into warming effectiveness:

- Global Heat Maps: Visualize performance metrics on geographic heat maps to identify regional variations and optimization opportunities.

- Correlation Analysis: Analyze correlations between warming activities and performance improvements to quantify ROI and optimize strategies.

- Trend Analysis: Track performance trends over time to identify gradual degradation or improvement and adjust strategies accordingly.

Anomaly Detection and Alerting

Implement systems to identify and respond to warming failures or performance anomalies:

- Pattern Recognition: Use machine learning to identify abnormal performance patterns that may indicate warming failures or CDN issues.

- Predictive Alerting: Implement predictive alerting that identifies potential issues before they impact users, based on early warning signs in synthetic monitoring data.

- Automated Remediation: Develop automated remediation workflows that respond to detected anomalies with targeted warming or configuration adjustments.
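
As a simpler statistical stand-in for the machine-learning pattern recognition described above, a z-score check against recent history can flag TTFB readings that deviate sharply from the norm. The threshold of 3 standard deviations and the function name are assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history_ms: list[float], latest_ms: float, z_threshold: float = 3.0) -> bool:
    """Flag the latest TTFB if it is far outside the recent distribution."""
    if len(history_ms) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history_ms)
    sigma = stdev(history_ms)
    if sigma == 0:
        return latest_ms != mu
    return abs(latest_ms - mu) / sigma > z_threshold
```

A flagged reading could then trigger an automated remediation step, such as re-warming the affected resource from that location.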

Case Study: Global Media Platform

A global media platform with over 50 million daily users implemented an advanced CDN warm-up strategy with these sophisticated techniques:

Initial Challenges:

- Massive content library (>500TB) with frequent updates

- Global audience across 190+ countries

- Multiple CDN providers with different caching behaviors

- Seasonal traffic spikes exceeding 10x baseline volume

Advanced Implementation:

- Deployed machine learning models to predict content popularity and optimize warming priorities

- Integrated warming with CI/CD pipeline for automatic post-deployment optimization

- Implemented tiered warming strategy with critical path saturation and long-tail rotation

- Developed cross-CDN warming orchestration to maintain consistency across providers

Results:

- Achieved 99.3% cache hit ratio across all regions

- Reduced origin traffic by 94% during peak events

- Decreased global performance variation to <5%

- Maintained sub-100ms TTFB for 99.7% of requests

- Reduced infrastructure costs by 32% through origin offloading

This case study demonstrates how advanced techniques can scale CDN warm-up strategies to meet the demands of even the largest global platforms while delivering exceptional performance and cost efficiency.

Future Directions in CDN Warming

The field of CDN warming continues to evolve with emerging technologies and approaches:

Edge Computing Integration

As edge computing capabilities expand, warming strategies will evolve to include:

- Edge Function Warming: Pre-executing edge functions to warm computational caches in addition to content caches.

- Edge Database Warming: Extending warming strategies to include edge-based databases and data stores.

- Compute-Intensive Preprocessing: Using edge compute to perform preprocessing during warming, further reducing latency for real users.

AI-Driven Optimization

Artificial intelligence will play an increasingly central role in warming optimization:

- User Behavior Prediction: Using AI to predict not just what content will be popular, but the specific navigation paths users will take, enabling precise journey-based warming.

- Autonomous Optimization: Developing fully autonomous systems that continuously optimize warming strategies without human intervention.

- Cross-Platform Intelligence: Implementing AI systems that coordinate warming across web, mobile, and emerging platforms to ensure consistent performance in omnichannel experiences.

These advanced techniques and future directions represent the cutting edge of CDN warm-up strategies, enabling organizations to deliver exceptional performance at global scale while optimizing resource utilization and cost efficiency.

Conclusion: Transforming CDN Performance Through Proactive Warming

Content Delivery Networks have revolutionized web performance by distributing content closer to users, but the inherent challenge of cold caches has remained a persistent limitation. As we’ve explored throughout this technical deep-dive, synthetic monitoring provides a powerful solution to this challenge, enabling organizations to transform their CDNs from reactive caching systems into proactive performance accelerators.

The benefits of implementing a comprehensive CDN warm-up strategy extend far beyond technical performance metrics. By eliminating cold cache penalties, organizations can deliver consistently excellent user experiences that directly impact business outcomes:

- Improved Conversion Rates: Faster, more consistent performance leads to higher conversion rates across all markets.

- Enhanced User Engagement: Reduced latency increases user engagement and content consumption.

- Stronger Brand Perception: Consistent global performance creates a premium brand experience regardless of user location.

- Reduced Infrastructure Costs: Higher cache hit ratios dramatically reduce origin server load and associated infrastructure costs.

- Improved Operational Resilience: Proactively warmed caches provide protection against traffic spikes and other operational challenges.

The implementation of CDN warm-up through synthetic monitoring represents a shift from reactive to proactive performance optimization. Rather than waiting for users to experience and report performance issues, organizations can ensure optimal performance before users arrive. This approach aligns with modern user experience expectations, where even milliseconds of delay can affect user satisfaction and business outcomes.

As web applications continue to grow in complexity and global reach, the importance of CDN warm-up will only increase. Organizations that implement sophisticated warming strategies will gain a significant competitive advantage through superior performance, reduced costs, and enhanced user experiences.

The journey to optimal CDN performance begins with a single step: implementing basic synthetic monitoring for your most critical content. From there, organizations can progressively enhance their strategies with the advanced techniques we’ve explored, scaling to meet the demands of even the most complex global operations.

By embracing CDN warm-up through synthetic monitoring, you’re not just optimizing a technical metric—you’re transforming the experience you deliver to every user, everywhere in the world.

References

[1] Kissmetrics, “How Loading Time Affects Your Bottom Line,” https://neilpatel.com/blog/loading-time/

[2] Cloudflare, “What is Edge Computing?,” https://www.cloudflare.com/learning/cdn/glossary/edge-server/

[3] Web.dev, “Web Vitals,” https://web.dev/vitals/

[4] Akamai, “Cache Hit Ratio: The Key Metric for Happier Users and Lower Expenses,” https://www.akamai.com/blog/edge/the-key-metric-for-happier-users

[5] Kinsta, “WordPress CDN — Improve Load Times By Up To 72% With a CDN,” https://kinsta.com/blog/wordpress-cdn/

[6] Dotcom-Monitor, “Optimize CDNs with Synthetic Monitoring,” https://www.dotcom-monitor.com/blog/optimize-cdns-with-synthetic-monitoring/

[7] Akamai, “Understanding Cache-Control Headers,” https://developer.akamai.com/blog/2020/09/25/understanding-cache-control-headers

[8] Google Developers, “Largest Contentful Paint (LCP),” https://web.dev/lcp/

[9] MDN Web Docs, “Stale-While-Revalidate,” https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Cache-Control#stale-while-revalidate

[10] Fastly, “Edge Side Includes (ESI) Language Specification,” https://www.fastly.com/documentation/guides/esi-use